20. December 2023 By Mykola Zubok

Data Evolution Chronicles: Tracing the Path from Warehouse to Lakehouse Architecture - part 2

In our exploration of the two fundamental pillars of the data realm - the data warehouse and the data lake - we concluded the discussion in Part One with the assertion that a hybrid approach could prove highly advantageous. This brings us to the next step in our journey.

Modern Data Warehouse or 2-tier architecture

As history shows, data lakes have not completely replaced relational data warehouses, but they have proven their value for staging and preparing data.

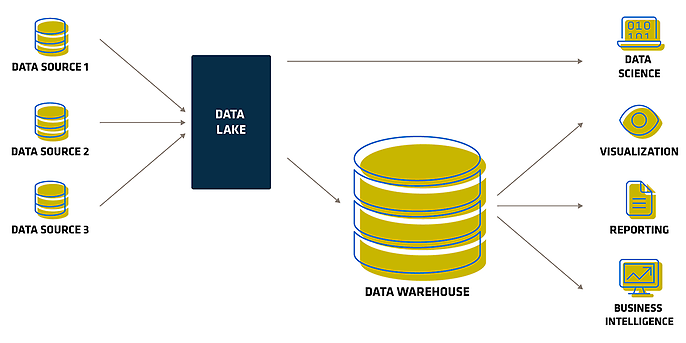

Since the mid-2010s, the two-tier architecture has dominated the industry. It uses cloud object storage services such as Amazon S3 or Azure Data Lake Storage (ADLS) as the data lake and loads data downstream into a data warehouse such as Redshift or Snowflake. In other words, it embodies a 'best of both worlds' philosophy: the data lake acts as a staging area for data preparation and helps data scientists build machine learning models, while the data warehouse is dedicated to serving data, ensuring security and compliance, and supporting business users' queries and reporting.

2-Tier Data Platform Architecture

While this enables BI and ML on the data in these two systems, it also results in duplicated data, additional infrastructure costs, security challenges and significant operational overhead. In a two-tier architecture, data is first ETL'd from operational databases into a data lake. The lake stores data from across the enterprise in low-cost object storage, in formats compatible with popular machine learning tools, but it is often poorly organised and maintained. Next, a small subset of the critical business data is ETL'd a second time and loaded into the data warehouse for business intelligence and analytics. Because of these multiple ETL steps, the two-tier architecture requires regular maintenance and often leads to stale data.
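To see why each extra ETL hop adds staleness, consider a back-of-the-envelope calculation. The sketch below assumes each hop runs on a fixed, unsynchronised schedule; the hop names and the hourly/daily schedules are purely hypothetical. In the worst case, a record arrives just after a run has started, so it waits a full interval for the next run plus that run's duration, and every hop adds this again.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class EtlHop:
    """One scheduled ETL step (hypothetical parameters, in minutes)."""
    name: str
    interval_min: int  # time between scheduled runs
    duration_min: int  # time one run takes to finish


def worst_case_staleness(hops: list[EtlHop]) -> int:
    # Worst case per hop: the record just misses a run, waits one interval
    # for the next one, then waits for that run to complete.
    return sum(h.interval_min + h.duration_min for h in hops)


# Hypothetical schedules: hourly into the lake, daily into the warehouse.
lake = EtlHop("operational DB -> data lake", interval_min=60, duration_min=10)
warehouse = EtlHop("data lake -> warehouse", interval_min=24 * 60, duration_min=30)

print(worst_case_staleness([lake]))             # 70
print(worst_case_staleness([lake, warehouse]))  # 1540
```

Under these assumed schedules, data in the lake is at most about 70 minutes old, while the same data in the warehouse can be more than a day old, which is the trade-off the next paragraph describes.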

For all the benefits of data lakes and data warehouses, this architecture still leaves data analysts with an almost impossible choice: use fresh but unreliable data from the data lake, or high-quality but stale data from the data warehouse. Because popular data warehousing solutions use closed formats, it is also very difficult to apply the dominant open-source data analysis frameworks to the high-quality data without introducing another ETL step and adding further staleness. The next concept aims to address these issues.

Data Lakehouse

In late 2020, Databricks introduced the Data Lakehouse. They define a lakehouse as a data management system based on low-cost, directly accessible storage that also provides traditional analytical DBMS management and performance features such as ACID transactions, data versioning, auditing, indexing, caching and query optimisation. Lakehouses thus combine the key benefits of data lakes and data warehouses: from the former, low-cost storage in an open format accessible by a variety of systems; from the latter, powerful management and optimisation capabilities.

Lakehouses are particularly well suited to cloud environments that have a clear separation between computing and storage resources. This separation allows different compute applications to run independently on dedicated compute nodes (e.g. a GPU cluster for machine learning), while directly accessing the same stored data. However, it's also possible to build a Lakehouse infrastructure using on-premises storage systems such as Hadoop Distributed File System (HDFS).

Why users need a new solution

- 1. Data quality and reliability: Implementing correct data pipelines is difficult in itself, and two-tier architectures with a separate lake and warehouse add additional complexity that exacerbates this problem. For example, the data lake and warehouse systems may have different semantics in their supported data types, SQL dialects, etc.; data may be stored with different schemas in the lake and warehouse (e.g. denormalised in one); and the increased number of ETL/ELT jobs spanning multiple systems increases the likelihood of failures and errors.

- 2. Data freshness: More and more business applications require up-to-date data, but 2-tier architectures increase data staleness by having a separate staging area for incoming data before the warehouse and using periodic ETL/ELT jobs to load it. In theory, organisations could implement more streaming pipelines to update the data warehouse faster, but these are still more difficult to operate than batch jobs. Applications such as customer support systems and recommendation engines are simply ineffective with stale data, and even human analysts querying warehouses report stale data as a major problem.

- 3. Unstructured data: In many industries, much of the data is now unstructured as organisations collect images, sensor data, documents, and so on. Organisations need easy-to-use systems to manage this data, but SQL data warehouses and their APIs do not easily support this.

- 4. Machine learning and data science: Most organisations are now deploying machine learning and data science applications, but these are not well served by data warehouses and lakes. These applications need to process large amounts of data with non-SQL code, so they cannot run efficiently over ODBC/JDBC. As advanced analytics systems continue to evolve, giving them direct access to data in an open format will be the most effective way to support them.

- 5. Data management: In addition, ML and data science applications suffer from the same data management issues as traditional applications, such as data quality, consistency and isolation, so there is immense value in bringing DBMS capabilities to their data.
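One DBMS capability that addresses the data quality problem in points 1 and 5 is schema enforcement on write, which lakehouse table formats such as Delta Lake provide: a batch whose columns or types do not match the table schema is rejected as a whole instead of silently polluting the table. The sketch below is a minimal, library-free illustration of that idea, not any engine's actual implementation; the `ORDERS_SCHEMA` table, the `enforce_schema` function and the `SchemaMismatchError` exception are hypothetical names.

```python
from __future__ import annotations

# Hypothetical table schema: column name -> required Python type.
ORDERS_SCHEMA: dict[str, type] = {"order_id": int, "customer": str, "amount": float}


class SchemaMismatchError(ValueError):
    """Raised when a batch does not match the table schema (hypothetical name)."""


def enforce_schema(rows: list[dict], schema: dict[str, type]) -> list[dict]:
    """Validate every row before anything is written; reject the whole batch on error."""
    for i, row in enumerate(rows):
        missing = schema.keys() - row.keys()
        unexpected = row.keys() - schema.keys()
        if missing or unexpected:
            raise SchemaMismatchError(
                f"row {i}: missing={sorted(missing)}, unexpected={sorted(unexpected)}"
            )
        for column, expected in schema.items():
            value = row[column]
            # None is treated as NULL; otherwise the type must match exactly
            # (so a bool is not silently accepted as an int).
            if value is not None and type(value) is not expected:
                raise SchemaMismatchError(
                    f"row {i}: column {column!r} expects {expected.__name__}, "
                    f"got {type(value).__name__}"
                )
    return rows


good = [{"order_id": 1, "customer": "acme", "amount": 19.5}]
bad = [{"order_id": "1", "customer": "acme", "amount": 19.5}]

enforce_schema(good, ORDERS_SCHEMA)  # accepted unchanged
try:
    enforce_schema(bad, ORDERS_SCHEMA)
except SchemaMismatchError as err:
    print(err)  # row 0: column 'order_id' expects int, got str
```

Validating the whole batch before writing anything is what makes the rejection all-or-nothing; in a real table format, this check runs before the write is committed to the transaction log.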

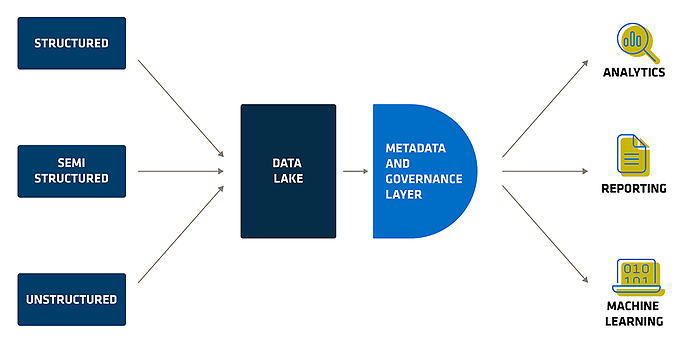

The idea behind a data lakehouse is to simplify the architecture by storing all data in a single data lake, rather than pairing the lake with a data warehouse in one complex system. In the past, a data lake alone could not provide the functionality of a data warehouse. That is where open table formats such as Delta Lake and Apache Iceberg come in.

These formats add a transactional layer on top of an existing data lake that provides features similar to those of a relational data warehouse (RDW), improving reliability, security and performance. In essence, a Delta Lake table can be thought of as a set of Parquet files plus a transaction log of JSON metadata files in a neighbouring folder (_delta_log); Iceberg follows a similar idea with its own metadata files. Schematically, a data lakehouse looks like a data lake with an additional metadata and governance layer.
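The "Parquet files plus JSON log" idea can be illustrated with a deliberately simplified sketch. It assumes a Delta-style layout: each commit is a zero-padded, numbered JSON file in a `_delta_log` folder, with one `add` or `remove` action per line. The real Delta protocol records much more (protocol and schema metadata, file statistics, periodic checkpoints), and the file names here are hypothetical; the Parquet files themselves are never written, only their paths are tracked. Replaying the log up to a given version yields the table's file set at that version, which is the basis of data versioning ("time travel").

```python
from __future__ import annotations

import json
import os
import tempfile

LOG_DIR = "_delta_log"  # Delta-style log folder; the action format below is simplified


def _commit_path(table_dir: str, version: int) -> str:
    # Zero-padded version numbers keep commit files in lexical = numeric order.
    return os.path.join(table_dir, LOG_DIR, f"{version:020d}.json")


def write_commit(table_dir: str, version: int, actions: list[dict]) -> None:
    """Write one commit: newline-delimited JSON actions (no concurrency control)."""
    os.makedirs(os.path.join(table_dir, LOG_DIR), exist_ok=True)
    with open(_commit_path(table_dir, version), "w", encoding="utf-8") as f:
        for action in actions:
            f.write(json.dumps(action) + "\n")


def snapshot(table_dir: str, version: int | None = None) -> set[str]:
    """Replay the log (up to `version`, if given) and return the live data files."""
    log_dir = os.path.join(table_dir, LOG_DIR)
    if not os.path.isdir(log_dir):
        return set()
    live: set[str] = set()
    for name in sorted(n for n in os.listdir(log_dir) if n.endswith(".json")):
        if version is not None and int(name[:-5]) > version:
            break
        with open(os.path.join(log_dir, name), encoding="utf-8") as f:
            for line in f:
                action = json.loads(line)
                if "add" in action:
                    live.add(action["add"]["path"])
                elif "remove" in action:
                    live.discard(action["remove"]["path"])
    return live


with tempfile.TemporaryDirectory() as table:
    write_commit(table, 0, [{"add": {"path": "part-000.parquet"}}])
    write_commit(table, 1, [{"add": {"path": "part-001.parquet"}}])
    # Version 2 compacts both files into one in a single commit.
    write_commit(table, 2, [
        {"remove": {"path": "part-000.parquet"}},
        {"remove": {"path": "part-001.parquet"}},
        {"add": {"path": "part-002.parquet"}},
    ])
    print(sorted(snapshot(table)))             # ['part-002.parquet']
    print(sorted(snapshot(table, version=1)))  # ['part-000.parquet', 'part-001.parquet']
```

Because the compaction's removes and add live in one commit file, a reader replaying the log sees either the old two files or the new one, never a mix. That is the property the transactional layer adds on top of plain object storage.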

Data Lakehouse at a glance

At this point, it might seem that the only difference between a data lake and a lakehouse is the use of Delta Lake or Apache Iceberg. However, the essence of a lakehouse goes beyond specific technologies. Rather, it is rooted in principles that deserve careful consideration at the planning stage. The Lakehouse concept is underpinned by seven fundamental principles, which we will explore in more detail.

- 1. Openness: The lakehouse prioritises open standards over closed-source technology, ensuring the longevity of data and facilitating collaboration through non-proprietary technologies and methodologies such as decoupled storage and compute engines.

- 2. Data Diversity: In a lakehouse, all data is equally accessible: structured and semi-structured data are both treated as first-class citizens, with schema enforcement applied.

- 3. Workflow Diversity: Users can interact with data in a lakehouse in a variety of ways, including notebooks, custom applications and BI tools, promoting multiple workflow options without restrictions.

- 4. Processing Diversity: The lakehouse values both streaming and batch processing, and leverages the Delta architecture to integrate streaming and batch technologies into a single layer for comprehensive data processing.

- 5. Language Agnostic: The lakehouse aims to support all access methods and programming languages; in practice, engines such as Apache Spark approach this through broad support for SQL, Python, Scala and Java.

- 6. Decoupling of storage and compute: Unlike traditional data warehouses, the lakehouse separates the storage and compute layers, providing the flexibility to mix and match technologies, significant cost reductions through cloud object stores, and the ability to scale storage and compute independently.

- 7. ACID transactions: Addressing a key limitation of data lakes, lakehouses incorporate ACID transactions, significantly improving reliability and efficiency by managing transactional data processing and ensuring the integrity of data operations.
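How can a folder of files on object storage offer ACID transactions? Log-based table formats such as Delta Lake rely on optimistic concurrency: each writer tries to create the next numbered commit file, and the storage layer must guarantee that only one writer can create a given file. The sketch below illustrates that idea on a local POSIX filesystem, where `os.link` fails if the target already exists and so acts as an atomic "put if absent". It is not how any particular engine is implemented: cloud object stores need an equivalent primitive or an external coordination service, real engines check for logical conflicts before retrying, and the function and exception names are hypothetical.

```python
from __future__ import annotations

import json
import os
import tempfile

LOG_DIR = "_delta_log"  # Delta-style log folder; simplified for illustration


class ConcurrentCommitError(Exception):
    """Another writer already committed this version (hypothetical name)."""


def latest_version(table_dir: str) -> int:
    """Return the highest committed version, or -1 for an empty table."""
    log_dir = os.path.join(table_dir, LOG_DIR)
    if not os.path.isdir(log_dir):
        return -1
    versions = [int(n[:-5]) for n in os.listdir(log_dir) if n.endswith(".json")]
    return max(versions, default=-1)


def try_commit(table_dir: str, version: int, actions: list[dict]) -> None:
    """Publish a complete commit file, or fail if `version` is already taken."""
    log_dir = os.path.join(table_dir, LOG_DIR)
    os.makedirs(log_dir, exist_ok=True)
    target = os.path.join(log_dir, f"{version:020d}.json")
    # Write the whole commit to a temporary file first, so that readers never
    # observe a half-written commit.
    fd, tmp = tempfile.mkstemp(dir=log_dir, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as f:
            for action in actions:
                f.write(json.dumps(action) + "\n")
        # os.link raises FileExistsError if the target exists: put-if-absent.
        os.link(tmp, target)
    except FileExistsError:
        raise ConcurrentCommitError(f"version {version} already committed") from None
    finally:
        os.remove(tmp)


def commit_with_retry(table_dir: str, actions: list[dict], max_attempts: int = 3) -> int:
    """Optimistic concurrency: claim the next version, retry if another writer won."""
    for _ in range(max_attempts):
        version = latest_version(table_dir) + 1
        try:
            try_commit(table_dir, version, actions)
            return version
        except ConcurrentCommitError:
            # A real engine would check here whether the winning commit
            # logically conflicts with ours before retrying.
            continue
    raise ConcurrentCommitError(f"gave up after {max_attempts} attempts")
```

If two writers both see an empty table and race for version 0, exactly one `try_commit` succeeds; the other raises `ConcurrentCommitError`, and `commit_with_retry` then places its change at version 1. Either a commit file appears complete or not at all, which gives readers atomicity and isolation at the granularity of a commit.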

While data warehouses have a long history and have evolved, they lack the adaptability to meet today's data processing needs. On the other hand, data lakes address many of the challenges but sacrifice some of the benefits of data warehouses. The emergence of the data lakehouse seeks to reconcile these differences by combining the strengths of both approaches to create a solution that incorporates the best features of each. The data lakehouse architecture is still in its early stages of development, requiring time to mature and establish best practices that are gradually being shared by early adopters. Meanwhile, data warehouses and data lakes continue to be deployed for specific use cases. In many cases, these two approaches coexist and complement each other effectively to address immediate challenges.

Conclusion

Data warehouses and data lakes were disruptive solutions to the analytics challenges of their respective eras, designed to address the prevalent problems of their time with the technology then available. The lakehouse concept is a product of the contemporary landscape, in which machine learning is being adopted not only by major industry players but also by smaller companies. The concepts discussed here offer guidance on choosing an appropriate approach to a given problem. Unfortunately, there is no one-size-fits-all solution. While it may seem that traditional data warehouses have become obsolete in today's data environment, this is not entirely true. Many organisations have not yet reached the level of data maturity needed to implement complex systems such as a lakehouse, and a simple data warehouse may be all they need. On the other hand, engineers often want to use the latest technologies and implement the latest architectures. It is therefore crucial for developers to understand the root of the problem and then look for an appropriate solution, rather than applying the most hyped concept.

You can find more exciting topics from the adesso world in our blog articles published so far.
