14 September 2023, by Julius Haas and Sebastian Brückner

Generative AI today: the real possibilities beyond the theoretical

Practical applications in Google Cloud

If you have read articles about generative AI recently, you have most likely come across questions like: “How far are we from achieving artificial superintelligence? And is AI going to put us all out of work?”

In this blog post, we want to avoid getting bogged down in philosophical questions and will instead shed light on what is actually possible now with Google Cloud in the realm of generative AI. We will explain what can be done out of the box and why AI is not about to put everyone out of work.

People fearing for their jobs is nothing new. When the photocopier and pocket calculator were introduced in 1963 and 1967, respectively, there were unfounded fears of being replaced by machines. If you are interested, feel free to browse the Pessimists Archive. It is worth a look and bound to provide some amusing tales.

That being said, these and other questions are not completely unjustified and have been the subject of much debate since the rollout of ChatGPT and Bard. It is important for all of us to explore the potential risks and societal impacts of artificial intelligence.

Unfortunately, one key fact is currently being lost in this shuffle: generative artificial intelligence is already able to create real added value today. And with Vertex AI in Google Cloud, this is relatively easy to do.

What is generative artificial intelligence and how can I use it?

Let us take a couple of steps back first. Before we delve deeper into the matter, we should explain what we are actually talking about. Artificial intelligence enables computers to perform tasks that would normally require human intelligence. Generative AI allows users to create new text, audio and image files. What makes it stand out is not only the new content it can create, but the fact that it also understands the information.

Generative AI is built on foundation models such as GPT and PaLM, which have been trained on vast amounts of text. This in turn allows them to compose, summarise or translate texts. But that is not all: they are also able to answer questions about the contents of a given text or PDF file. Especially in a business context, this opens up a host of opportunities as well as a number of use cases that can already be implemented to a high standard today.

Here are a few examples:

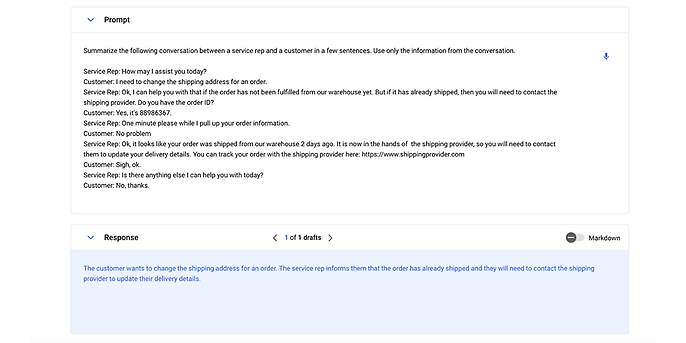

1. Support enquiries can be summarised on command.

2. Customer service chatbots can be implemented.

The full-feature package from the cloud:

With Vertex AI, Google Cloud offers a powerful platform that can be used to develop AI solutions of all sorts. It is based on the PaLM 2 family of large language models, which come in various sizes (Gecko, Otter, Bison, Unicorn). These can be accessed directly via prompts (instructions in the form of text or images) and used without model training and all the costs this entails. Other options include Chirp as a universal speech model, Imagen for image generation and Codey for code generation and code completion. Model Garden also offers a variety of Google and open-source models such as Stable Diffusion, BERT and Llama 2.
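As a rough illustration of prompt-based access, the sketch below builds a summarisation prompt for a support enquiry and sends it to a text model. It assumes the `vertexai` Python SDK (package `google-cloud-aiplatform`) and its `TextGenerationModel` class as they were available around the time of writing; the model name `text-bison`, the region, the parameter values and all helper names (`build_summary_prompt`, `summarise`, `load_palm_model`, `CannedModel`) are illustrative assumptions, and the SDK surface may have changed since.

```python
from types import SimpleNamespace
from typing import Any, Protocol


class TextModel(Protocol):
    """Anything with predict(prompt, **params) that returns an object with .text."""

    def predict(self, prompt: str, **params: Any) -> Any: ...


def build_summary_prompt(enquiry: str, max_sentences: int = 2) -> str:
    """Wrap a support enquiry in a plain-text summarisation instruction."""
    return (
        f"Summarise the following support enquiry in at most "
        f"{max_sentences} sentences.\n\n"
        f"Enquiry:\n{enquiry.strip()}\n\nSummary:"
    )


def summarise(model: TextModel, enquiry: str) -> str:
    """Send the prompt to the model and return the answer text, stripped."""
    response = model.predict(
        build_summary_prompt(enquiry),
        temperature=0.2,        # low temperature for more repeatable output
        max_output_tokens=256,  # cap the length of the generated summary
    )
    return response.text.strip()


def load_palm_model(project: str, location: str = "us-central1") -> TextModel:
    """Assumption: needs google-cloud-aiplatform installed and valid credentials."""
    import vertexai
    from vertexai.language_models import TextGenerationModel

    vertexai.init(project=project, location=location)
    return TextGenerationModel.from_pretrained("text-bison")


class CannedModel:
    """Offline stand-in so the sketch can be tried without cloud access."""

    def __init__(self, answer: str) -> None:
        self.answer = answer
        self.last_prompt = ""

    def predict(self, prompt: str, **params: Any) -> Any:
        self.last_prompt = prompt
        return SimpleNamespace(text=self.answer)


demo = CannedModel("  Customer cannot log in after a password reset.  ")
print(summarise(demo, "Hi, since I reset my password I can no longer log in."))
```

Passing the model in as an argument keeps the prompt logic testable offline; in a real project, `load_palm_model("my-project-id")` (a placeholder ID) would take the place of `CannedModel`.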

The models can be tuned in Generative AI Studio using the company’s own data for special use cases such as an internal virtual assistant. In this case, they are provided with training examples in the form of prompts and sample answers relating to the company, thereby training an additional layer of the model. But do not worry. This supplementary data will not be used to train the base model provided by Google Cloud. The company data never leaves the company. Once this is done, the trained model can then be readily deployed and used via Vertex AI managed endpoints.
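To make the idea of "prompts and sample answers" more concrete, here is a minimal sketch of how such training examples could be written as JSON Lines, one example per line. The field names `input_text` and `output_text` are an assumption based on the format used for tuning PaLM text models; the example questions and the helper `to_tuning_jsonl` are made up for illustration, so check the current tuning documentation before relying on this layout.

```python
import json


def to_tuning_jsonl(examples: list[tuple[str, str]]) -> str:
    """Serialise (prompt, answer) pairs as JSON Lines, one example per line."""
    lines = []
    for prompt, answer in examples:
        if not prompt.strip() or not answer.strip():
            raise ValueError("Each tuning example needs a prompt and an answer.")
        # Field names are an assumption; verify them for the model you tune.
        record = {"input_text": prompt, "output_text": answer}
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)


# Hypothetical examples for an internal virtual assistant.
examples = [
    ("How do I request a new laptop?", "Open a ticket in the IT portal under 'Hardware'."),
    ("Who approves travel expenses?", "Your line manager approves them in the HR tool."),
]
tuning_file_content = to_tuning_jsonl(examples)
print(tuning_file_content)
```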

To recap, Generative AI Studio allows you to prototype, test and optimise generative models quickly using your own data, meaning AI solutions can be implemented and are ready for use in no time.

What is possible out of the box?

In addition to providing the individual tools in Generative AI Studio, including the PaLM 2 large language model and other models for speech, video and audio, Vertex AI also offers out-of-the-box and modular features. Having given you a basic overview of Vertex AI, let us now take a closer look at two of these features.

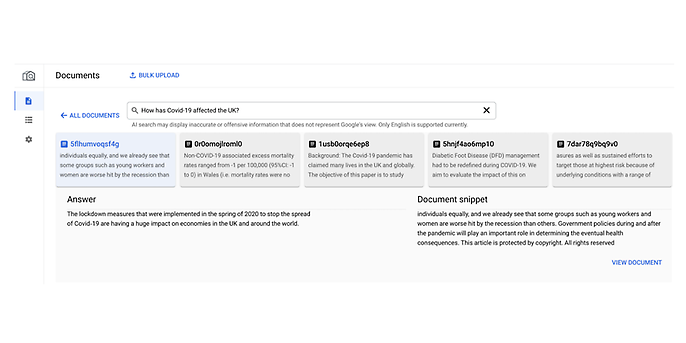

Work intelligently on the intranet: Google Cloud’s Document AI Warehouse with GenAI search

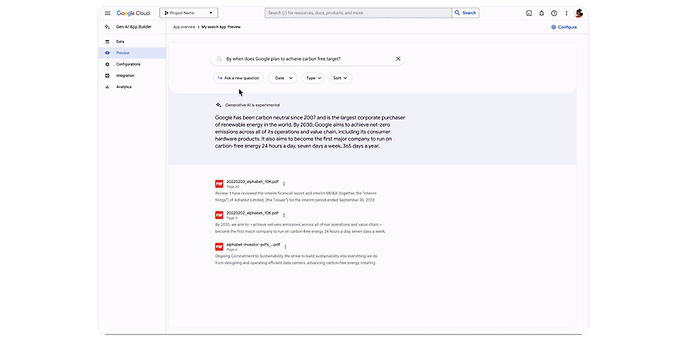

A popular use case at many companies is searching for documents on the intranet. However, searching through documents manually is often tedious and time-consuming. Many users would like an easier way to find the information they are looking for. To meet this need, Google has announced the integration of generative AI into Document AI Warehouse, the central platform for smart document management and processing in Google Cloud. This also includes optical character recognition (OCR) in documents, including images in PDFs.

Once GenAI has been integrated, users will be able to ask questions about the documents in Document AI Warehouse. These questions are then answered by Google’s generative language models on the basis of the documents. If a question pertains to multiple documents, up to 25,000 words can be analysed in the background. In addition, excerpts from the documents used to generate the response are provided along with the answer. It is also possible to go directly to the relevant document.
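The general pattern described here (pick relevant documents, stay within a word budget, keep track of the sources) can be sketched in a few lines. This is a deliberately naive toy based on keyword overlap and is not how Document AI Warehouse works internally; only the 25,000-word figure comes from the text above, and the names `select_context` and `tokenize` are hypothetical.

```python
import re

WORD_BUDGET = 25_000  # figure quoted in the text above


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())


def select_context(
    question: str, documents: dict[str, str], budget: int = WORD_BUDGET
) -> list[tuple[str, str]]:
    """Pick the documents sharing most words with the question, within the budget.

    Returns (document_id, excerpt) pairs so that an answer can cite its sources.
    """
    query_terms = set(tokenize(question))
    scored = []
    for doc_id, text in documents.items():
        overlap = len(query_terms & set(tokenize(text)))
        if overlap:
            scored.append((overlap, doc_id, text))
    scored.sort(key=lambda item: (-item[0], item[1]))  # best match first, ties by id

    selected, used = [], 0
    for _, doc_id, text in scored:
        remaining = budget - used
        if remaining <= 0:
            break
        words = text.split()
        excerpt = " ".join(words[:remaining])  # truncate the last document to fit
        selected.append((doc_id, excerpt))
        used += min(len(words), remaining)
    return selected


docs = {
    "warranty.pdf": "The warranty period is two years",
    "holidays.pdf": "Holiday policy for staff",
    "claims.pdf": "Warranty claims need a receipt",
}
print(select_context("How long is the warranty period?", docs))
```

A production system would typically rank by semantic similarity rather than shared keywords, but the bookkeeping around the budget and the source excerpts would look similar.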

One interesting use case is the integration of generative AI into the intranet, which will enable customer service representatives to answer support requests more quickly and efficiently and make it even easier for customers to access relevant product information via natural language searches.

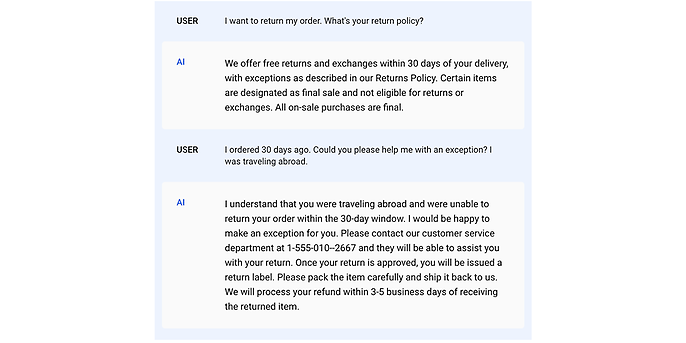

Vertex AI Search and Conversation (or Gen App Builder, as it is also known)

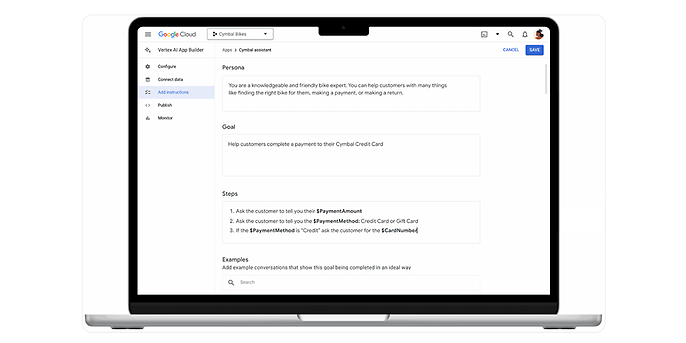

To abstract away the complexity of generative search solutions, Google has released a further product: Vertex AI Search and Conversation. This solution is designed to serve as a simple orchestration layer between the foundation models described above and the enterprise data. This means that even developers who lack years of machine learning experience can build smart applications that go beyond a simple document search (think GenAI search) in Document AI Warehouse.

Gen App Builder, source: https://cloud.google.com/blog/products/ai-machine-learning/vertex-ai-search-and-conversation-is-now-generally-available

For example, in addition to a custom search engine, a chatbot that accepts text and audio input can be created quickly. It can run queries against the underlying data and interact with it. It is possible to define whether the ‘knowledge’ of the foundation model should be included in the response alongside the company’s enterprise data.

A special feature is that a chatbot like the one described here is not only layered on top of the dynamically generated outputs of foundation models, but can also be combined with rule-based processes. This leads to more reliable results, which in turn allows tasks to be assigned to the chatbot. In practice, for example, an appointment could be made directly in the chat in natural language.
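The combination of rule-based processes and generated answers can be pictured as a simple router: messages that match a known pattern are handled deterministically, and everything else is passed on to the language model. The sketch below is a hypothetical illustration of that idea and not the mechanism used by Vertex AI Search and Conversation; the message format, the `handle_message` function and the `generate` callback are all assumptions.

```python
import re
from datetime import datetime
from typing import Callable

# Rule: "... appointment ... on YYYY-MM-DD at HH:MM"
APPOINTMENT = re.compile(
    r"\bappointment\b.*?\bon (\d{4}-\d{2}-\d{2}) at (\d{2}:\d{2})\b",
    re.IGNORECASE,
)


def handle_message(
    message: str, generate: Callable[[str], str], booked: set[str]
) -> str:
    """Book appointments with fixed rules; defer everything else to the model."""
    match = APPOINTMENT.search(message)
    if match is None:
        return generate(message)  # free-form question: let the model answer

    slot = f"{match.group(1)} {match.group(2)}"
    try:
        datetime.strptime(slot, "%Y-%m-%d %H:%M")  # reject impossible dates
    except ValueError:
        return "That does not look like a valid date and time."
    if slot in booked:
        return f"Sorry, {slot} is already taken."
    booked.add(slot)
    return f"Your appointment is booked for {slot}."


def fake_llm(message: str) -> str:
    """Stand-in for a real model call."""
    return f"(generated answer to: {message})"


calendar: set[str] = set()
print(handle_message("I need an appointment on 2023-10-02 at 14:30", fake_llm, calendar))
print(handle_message("What are your opening hours?", fake_llm, calendar))
```

Because the booking path never touches the model, its behaviour stays predictable even if the generated answers change over time.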

Example of a chat, source: https://cloud.google.com/blog/products/ai-machine-learning/vertex-ai-search-and-conversation-is-now-generally-available

Applications like this can already be created directly in the Vertex AI Search and Conversation UI.

Integration and outlook

If you are worried about being made redundant after reading about all the exciting possibilities described here, you can rest assured. The main challenge is and continues to be the integration of the new generative AI features into the company’s product and the associated development of robust applications. Although the responses generated by large language models are surprisingly accurate, eloquent and insightful in most situations, they can produce unexpected answers in some cases, for instance harmful content (such as instructions on how to make a bomb) or outputs that are structured in an unexpected manner. If we trust the answers and want to parse them directly, we will inevitably be reliant on the stability and availability of the large language models, as will our applications.
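One common way to reduce this dependency is to never trust the raw model output and to validate it before use. The following sketch assumes a hypothetical use case in which the model is asked to label a support ticket as JSON; the schema, the category names and the function `parse_ticket_label` are invented for illustration.

```python
import json
from dataclasses import dataclass
from typing import Optional

ALLOWED_CATEGORIES = {"billing", "technical", "other"}


@dataclass
class TicketLabel:
    category: str
    urgent: bool


def parse_ticket_label(raw: str) -> Optional[TicketLabel]:
    """Return a TicketLabel if the raw model output is valid, otherwise None."""
    # Models sometimes wrap JSON in prose or code fences,
    # so keep only the span between the outermost braces.
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end <= start:
        return None
    try:
        data = json.loads(raw[start : end + 1])
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None

    category = data.get("category")
    urgent = data.get("urgent")
    if not isinstance(category, str) or category not in ALLOWED_CATEGORIES:
        return None
    if not isinstance(urgent, bool):
        return None
    return TicketLabel(category=category, urgent=urgent)


print(parse_ticket_label('Sure! {"category": "billing", "urgent": true}'))
print(parse_ticket_label("I am sorry, I cannot help with that."))
```

When the function returns `None`, the application can retry, fall back to a default or hand the case to a human instead of failing on malformed output.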

This might be a little off-topic but here we go:

Just how problematic this can be was recently demonstrated in a paper titled “How Is ChatGPT’s Behavior Changing over Time?” by researchers at Stanford University and the University of California at Berkeley.

As the paper describes, there are dramatic differences between the March and June versions of GPT-4 and GPT-3.5 in some cases. For example, 52 per cent of the code generated by the March version of GPT-4 could be run directly, whereas three months later, in the June version, this was the case for only 10 per cent. The accuracy of the responses to mathematical queries has also decreased significantly in certain cases with GPT-4. The question ‘how many happy numbers are there in [7306, 7311]?’ was originally answered correctly 83.6 per cent of the time. In the later version, this figure fell to just 35.2 per cent. Over the same period, the responses given by GPT-3.5 to the same question became more accurate, with the share of correct answers rising from 30.6 to 48.2 per cent.
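For reference, the question itself is easy to settle with conventional code. A happy number is one that eventually reaches 1 when it is repeatedly replaced by the sum of the squares of its digits. The short sketch below (our own helper, not taken from the paper) counts the happy numbers in the closed interval [7306, 7311].

```python
def is_happy(n: int) -> bool:
    """Return True if repeatedly summing the squared digits of n reaches 1."""
    seen = set()
    while n != 1 and n not in seen:
        seen.add(n)  # remember visited values to detect a cycle
        n = sum(int(digit) ** 2 for digit in str(n))
    return n == 1


happy = [n for n in range(7306, 7312) if is_happy(n)]  # 7311 is included
print(happy, len(happy))  # [7306, 7309] 2
```

The deterministic answer is 2, which is exactly the kind of ground truth that can be used to check a model's responses over time.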

This indicates a continued need for machine learning tools and software engineers to closely monitor model performance and, above all, model behaviour to ensure the process runs smoothly. That is because if the response behaviour and the precision of the models change all the time, our applications, which are based on these models, must also be constantly adapted.
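In its simplest form, such monitoring can be a fixed set of prompts with known answers that is run against the model on a regular basis and compared with a baseline. The sketch below shows the idea with two stub functions standing in for two model versions; the test cases, the tolerance value and the names `accuracy` and `has_regressed` are illustrative assumptions rather than part of any Google Cloud tooling.

```python
from typing import Callable

Model = Callable[[str], str]

CASES = [
    ("What is 2 + 2?", "4"),
    ("What is the capital of France?", "Paris"),
    ("Is 7 a prime number? Answer yes or no.", "yes"),
    ("How many days are in a week?", "7"),
]


def accuracy(model: Model, cases: list[tuple[str, str]]) -> float:
    """Share of cases for which the model returns exactly the expected answer."""
    if not cases:
        raise ValueError("At least one test case is required.")
    hits = sum(1 for prompt, expected in cases if model(prompt).strip() == expected)
    return hits / len(cases)


def has_regressed(
    model: Model, cases: list[tuple[str, str]], baseline: float, tolerance: float = 0.05
) -> bool:
    """Flag the model if its accuracy falls below the baseline minus a tolerance."""
    return accuracy(model, cases) < baseline - tolerance


def march_stub(prompt: str) -> str:
    """Stand-in for an earlier model version that answers every case correctly."""
    return dict(CASES)[prompt]


def june_stub(prompt: str) -> str:
    """Stand-in for a later version that only answers 'What ...' questions."""
    return dict(CASES)[prompt] if prompt.startswith("What") else "I am not sure."


baseline = accuracy(march_stub, CASES)
print(baseline, accuracy(june_stub, CASES), has_regressed(june_stub, CASES, baseline))
```

Running a check like this on a schedule, or whenever the provider announces a new model version, gives an early warning before users notice the change.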

Do you have any other questions about generative AI, machine learning, language models or Google Cloud in general? What are the challenges you face in the area of AI? Let us talk. You can learn more about our partnership with Google Cloud and use cases we have implemented on our website. Feel free to get in touch with us.