17 August 2023, by Mykola Zubok

Open table formats in the modern data analytics landscape

In the ever-evolving landscape of big data processing and analytics, Apache Hudi, Iceberg and Delta Lake have emerged as powerful tools, revolutionising the way modern data platforms handle data management and analysis. Each of these technologies brings a unique set of features and capabilities to the table, all designed to address the challenges posed by large-scale data operations.

In this blog post, we will delve into the core functionalities of these table formats, revealing their unique strengths and applications. By examining how these solutions complement and differ from each other, we aim to show where each is best used when building robust and efficient modern data platforms.

Definitions

Delta Lake

Delta Lake is a powerful open-source storage layer designed to enhance the capabilities of existing data lake infrastructures, which are typically built on top of object stores like Azure Blob Storage. By adding features like ACID transactions and versioning to the data stored within these systems, Delta Lake empowers data engineers and data scientists to construct data pipelines and data lakes that are not only highly scalable but also exceptionally dependable. This combination of reliability and scalability makes Delta Lake a valuable tool for organisations seeking to optimise their data management and analytics processes.

Iceberg

Iceberg is an open-source table format that aims to provide efficient and scalable access to extensive datasets within data lakes. It achieves this by defining a table format that integrates with popular data processing tools like Apache Spark. Additionally, Iceberg provides crucial features such as ACID transactions, versioning and schema evolution, further enhancing its utility for data engineers and analysts. With these capabilities, Iceberg empowers users to work with large datasets effectively while ensuring data integrity and accommodating changes in data structure over time.

Hudi

Hudi, short for Hadoop Upserts Deletes and Incrementals, is an open-source framework tailored for data storage and processing. Its primary focus is on enabling real-time data access and analytics. The framework is equipped with essential features like ACID transactions, incremental data processing and efficient data indexing. These attributes make Hudi a perfect fit for use cases involving streaming data processing and real-time analytics. With Hudi, organisations can efficiently manage and analyse their data in real time, allowing for quicker insights and more responsive decision-making processes.

Common features

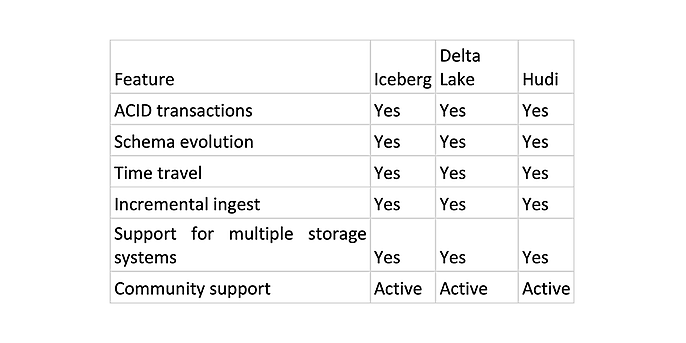

These data types have common features, and in some use cases, they can be interchangeable. The following table depicts the shared key functionalities.

Key functionalities of Iceberg, Delta Lake and Hudi

- ACID transactions ensure that your data is always consistent, even in the event of failures. This is important for data lakes that are used for business-critical applications.

- Schema evolution allows you to change the schema of your data lake tables without having to recreate the tables. This is important for data lakes that are used to store historical data.

- Time travel allows you to retrieve historical versions of your data lake tables. This is important for data lakes that are used for auditing and compliance purposes.

- Incremental ingest allows you to load new data into your data lake tables without having to reprocess the entire dataset. This is important for data lakes that are used to ingest large amounts of streaming data.

- Support for multiple storage systems allows you to store your data lake tables in a variety of storage systems, such as Amazon S3, Azure Blob Storage and Google Cloud Storage. This gives you flexibility in choosing the storage system that best meets your needs.

- Active community support means that there are a lot of people who are using and contributing to the project. This means that you are more likely to find help if you need it.
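To make the first four features more concrete, here is a minimal sketch of the idea they share: a table is represented as an ordered log of commits. This is a toy in-memory model, not the actual implementation of Delta Lake, Iceberg or Hudi; the names `ToyTable`, `Commit`, `read` and `read_since` are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Optional

Row = dict[str, Any]


@dataclass(frozen=True)
class Commit:
    """One atomic entry in the table log: the schema plus the rows it added."""
    version: int
    schema: tuple[str, ...]
    added_rows: tuple[Row, ...]


@dataclass
class ToyTable:
    """Hypothetical in-memory stand-in for a log-based table format."""
    schema: tuple[str, ...]
    log: list[Commit] = field(default_factory=list)

    def commit(self, rows: list[Row], new_columns: tuple[str, ...] = ()) -> int:
        """Validate all rows first, then publish a single log entry."""
        schema = self.schema + tuple(c for c in new_columns if c not in self.schema)
        for row in rows:
            unknown = set(row) - set(schema)
            if unknown:
                # Nothing has been written yet, so a failed commit leaves no trace.
                raise ValueError(f"unknown columns: {sorted(unknown)}")
        version = len(self.log)
        self.log.append(Commit(version, schema, tuple(dict(r) for r in rows)))
        self.schema = schema
        return version

    def read(self, as_of: Optional[int] = None) -> list[Row]:
        """Time travel: replay the log up to and including version `as_of`."""
        if not self.log:
            return []
        last = len(self.log) - 1 if as_of is None else as_of
        schema = self.log[last].schema
        rows = [r for c in self.log[: last + 1] for r in c.added_rows]
        # Schema evolution: older rows get None for columns added later.
        return [{col: r.get(col) for col in schema} for r in rows]

    def read_since(self, version: int) -> list[Row]:
        """Incremental read: only the rows committed after `version`."""
        return [r for c in self.log[version + 1:] for r in c.added_rows]


table = ToyTable(schema=("id", "name"))
table.commit([{"id": 1, "name": "a"}])
table.commit([{"id": 2, "name": "b", "country": "DE"}], new_columns=("country",))

print(table.read(as_of=0))   # the table as it looked after the first commit
print(table.read())          # latest version, with the evolved schema
print(table.read_since(0))   # only what arrived after version 0
```

In this sketch, a commit either appends one complete log entry or raises before anything changes, which is the all-or-nothing part of ACID. Real formats keep the log as metadata files next to the data files in object storage and add concurrency control on top, which this toy model leaves out.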

Performance

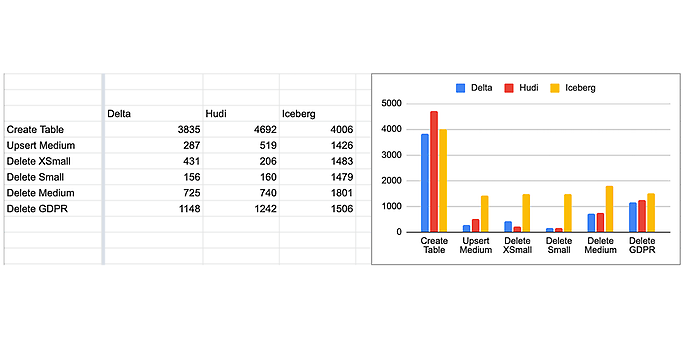

According to the benchmarks (https://brooklyndata.co/blog/benchmarking-open-table-formats, https://github.com/brooklyn-data/delta/pull/2), the following performance values are available:

Performance comparison. Source: https://github.com/brooklyn-data/delta/pull/2

As the charts above show, Delta Lake generally performs much better than Iceberg and slightly better than Hudi. When comparing performance, however, it is important to bear in mind that Delta Lake and Iceberg are optimised for append-only workloads, while Hudi is by default optimised for mutable workloads. By default, Hudi uses an ‘upsert’ write mode, which naturally carries a write overhead compared to plain inserts. Without this knowledge, you may be comparing apples to oranges.
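The difference between the two write modes can be sketched in a few lines. This is a simplified illustration and not how any of the three formats is implemented: the functions `append` and `upsert`, the `index` dictionary and the `id` key are all hypothetical. The point is only that an upsert must look up every incoming key before it can decide whether to update or insert, while an append never inspects existing data.

```python
def append(table: list[dict], batch: list[dict]) -> int:
    """Append-only write: existing rows are never inspected.

    Returns the number of key lookups performed (always zero).
    """
    table.extend(batch)
    return 0


def upsert(table: list[dict], index: dict, batch: list[dict], key: str = "id") -> int:
    """Upsert: one key lookup per incoming row decides between update and insert.

    `index` maps a key value to the row's position in `table`; it stands in
    for whatever index a real engine maintains. Returns the lookups performed.
    """
    lookups = 0
    for row in batch:
        lookups += 1
        position = index.get(row[key])
        if position is None:
            index[row[key]] = len(table)
            table.append(row)
        else:
            table[position] = row
    return lookups


first = [{"id": 1, "v": "a"}, {"id": 2, "v": "b"}]
second = [{"id": 2, "v": "b2"}, {"id": 3, "v": "c"}]

appended: list[dict] = []
append_lookups = append(appended, first) + append(appended, second)

upserted: list[dict] = []
index: dict = {}
upsert_lookups = upsert(upserted, index, first) + upsert(upserted, index, second)

print(len(appended), append_lookups)  # duplicates are kept, no lookups needed
print(len(upserted), upsert_lookups)  # one row per key, one lookup per written row
```

A benchmark that only inserts new rows therefore makes the upsert path pay for lookups it does not need, which is the apples-to-oranges effect described above.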

Integration with different platforms

By understanding the implications of standardising on a table format across platforms, we can unlock the true potential of cloud-based analytics, streamline data workflows and foster better collaboration between teams.

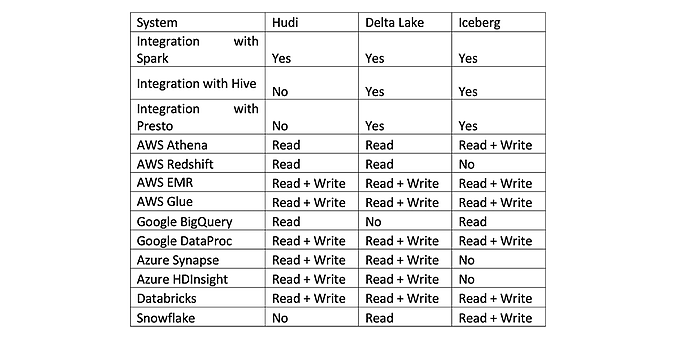

These systems support Hudi, Delta Lake and Iceberg

As we can see, not every platform supports every table format. For instance, Google BigQuery, a popular cloud-based analytics platform, lacks direct integration with Delta Lake. Similarly, Amazon Redshift and Azure Synapse Analytics do not offer built-in support for Iceberg. Furthermore, Snowflake, renowned for its cloud-based data warehousing capabilities, does not provide native support for Apache Hudi.

Which one to use

To build a data lake, consider Delta Lake and Hudi for their compatibility with data lake infrastructures like S3 or Azure Storage. For a data warehouse, Iceberg is a preferable option, excelling in efficient query performance. For real-time data access and analytics, Hudi is the ideal choice. If batch processing is what you need, Delta Lake and Iceberg offer robust support for data pipelines. All three formats – Delta Lake, Hudi and Iceberg – provide ACID transactions and data versioning, with Delta Lake standing out for its exceptional support in these areas. When dealing with evolving data structures over time, Iceberg is the optimal choice due to its robust support for schema evolution and versioning.

Delta Lake, Hudi and Iceberg provide solid integration with widely used data processing tools such as Apache Spark, Python or Hadoop. However, it is essential to carefully consider the project’s tech stack and future plans, as some major vendors may lack support for certain formats.
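The guidance in this article can be written down as a small lookup, which is one way to check a shortlist against a planned tech stack. The sketch below encodes only the recommendations and support gaps stated in this article; the use-case labels and the function name `candidate_formats` are hypothetical, and vendor support changes over time, so the data should be treated as a snapshot and verified before use.

```python
# Recommendations as stated in this article, keyed by hypothetical use-case labels.
RECOMMENDED = {
    "data_lake": {"Delta Lake", "Hudi"},
    "data_warehouse": {"Iceberg"},
    "real_time": {"Hudi"},
    "batch": {"Delta Lake", "Iceberg"},
    "schema_evolution": {"Iceberg"},
}

# Support gaps named in this article (a snapshot, not a complete matrix).
UNSUPPORTED = {
    "Google BigQuery": {"Delta Lake"},
    "Amazon Redshift": {"Iceberg"},
    "Azure Synapse Analytics": {"Iceberg"},
    "Snowflake": {"Hudi"},
}


def candidate_formats(use_cases: list[str], platforms: list[str]) -> list[str]:
    """Return formats recommended for every use case and not ruled out by any platform."""
    candidates = {"Delta Lake", "Hudi", "Iceberg"}
    for use_case in use_cases:
        candidates &= RECOMMENDED[use_case]
    for platform in platforms:
        # Platforms not listed above are assumed to impose no restriction.
        candidates -= UNSUPPORTED.get(platform, set())
    return sorted(candidates)


print(candidate_formats(["batch"], ["Google BigQuery"]))
print(candidate_formats(["data_lake"], []))
print(candidate_formats(["data_lake", "real_time"], ["Snowflake"]))
```

An empty result, as in the last call, signals that the requirements conflict and that either the use case or the platform choice needs to be revisited.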

You can find more exciting topics from the adesso world in our blog posts published so far.