22 May 2025, by Paula Johanna Andreeva and Sarah Fürstenberg

The EU AI Act: What companies should know and do now

What is the EU AI Act?

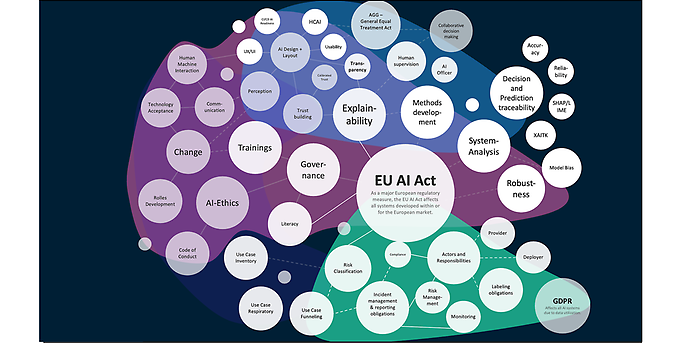

The EU AI Act is the world's first comprehensive legislation that establishes rules for artificial intelligence (AI) with concrete enforcement mechanisms. Its aim is to promote AI systems that combine innovation with trustworthiness while respecting fundamental rights and European values. The Act therefore offers more than a list of obligations: companies can use it as a springboard to make themselves future-proof in the field of AI. The sections below summarize the relevant topics.

The EU AI Act and trustworthiness

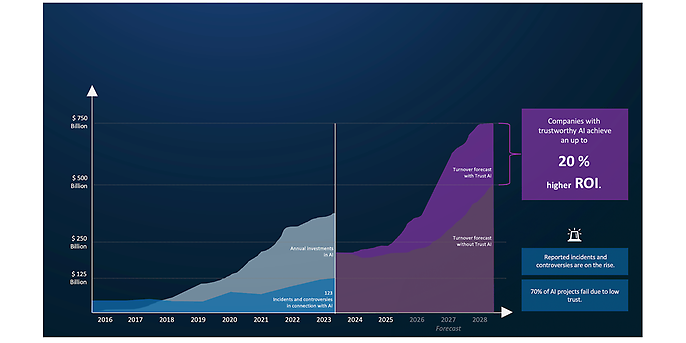

The EU AI Act makes “trust by design” a regulatory requirement. Trustworthy AI is not an add-on, but a prerequisite for sustainable value creation. It means developing and implementing AI technologies responsibly, prioritizing transparency, fairness, and reliability in order to promote acceptance in line with social values and legal standards. adesso conservatively estimates that companies relying on trustworthy AI achieve up to 20 percent higher ROI.

Every AI project is also a trustworthy AI project!

Potential opportunities through trustworthy AI

Key components of the EU AI Act

Definitions and scope

The EU AI Act defines AI systems as software that generates results and influences decisions through machine learning, logic-based, or statistical methods. It applies to providers, deployers, distributors, and importers, provided that the AI systems are placed on the EU market or used within the EU.

Actors and responsibilities

The EU AI Act distinguishes between different roles:

- Providers: Develop and distribute AI systems. They bear the main responsibility for compliance.

- Deployers: Use AI professionally, for example in HR, justice, or medicine. They must have adequate knowledge and use systems correctly.

- Importers and distributors: Must ensure that only compliant products are placed on the market.

- Notified bodies and authorities: Carry out checks, monitoring, and sanctions. In Germany, the Federal Network Agency (market surveillance), the Data Protection Conference (fundamental rights protection), and DAkkS (certification supervision) are among those responsible for key tasks.

Risk classification system

The EU AI Act is based on a risk-based regulatory approach that classifies AI systems into four categories: unacceptable, high, limited, and minimal.

- Unacceptable risk: Prohibited systems, such as manipulative technologies or social surveillance.

- High risk: For example, AI in critical infrastructure, education, and the justice system. Requirements such as risk management, transparency, and human control apply here.

- Limited risk: For example, chatbots.

- Minimal risk: For example, spam filters. No regulatory intervention applies.

Systems with unacceptable risk, such as manipulative technologies or social surveillance, are completely prohibited. High-risk systems are subject to strict requirements that include risk assessment, data quality management, human oversight, and conformity assessment.
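For a first internal screening, the four tiers can be treated as a simple lookup from use case to obligations. The following Python sketch is illustrative only: the names `RiskTier`, `EXAMPLE_USE_CASES`, `OBLIGATIONS`, and `obligations_for` are hypothetical, the mappings merely repeat the examples given above, and the entry for limited risk (transparency obligations) is an assumption added for completeness. An actual classification requires a legal assessment against the text of the Act.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk categories described above."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Illustrative examples taken from this article, not a legal classification.
EXAMPLE_USE_CASES = {
    "manipulative technology": RiskTier.UNACCEPTABLE,
    "critical infrastructure": RiskTier.HIGH,
    "education": RiskTier.HIGH,
    "justice system": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}

# Simplified obligations per tier, as summarized in this article.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["prohibited"],
    RiskTier.HIGH: [
        "risk assessment",
        "data quality management",
        "human oversight",
        "conformity assessment",
    ],
    RiskTier.LIMITED: ["transparency obligations"],  # assumption, see lead-in
    RiskTier.MINIMAL: [],
}


def obligations_for(use_case: str) -> list[str]:
    """Return the simplified obligations for a known example use case."""
    tier = EXAMPLE_USE_CASES.get(use_case.strip().lower())
    if tier is None:
        # Unknown use cases are not guessed; they need a manual assessment.
        raise ValueError(f"'{use_case}' is not in the example table; assess it manually")
    return OBLIGATIONS[tier]


if __name__ == "__main__":
    for use_case in ("chatbot", "education", "spam filter"):
        print(use_case, "->", obligations_for(use_case))
```

The lookup deliberately raises an error for anything outside the example table, since an unknown system should be escalated rather than silently treated as minimal risk.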

To-dos for companies

To ensure timely compliance, we advise organizations to take the following measures:

1. Take inventory: Create an overview of the AI systems you use

- An AI inventory is a central overview of all AI systems used in a company. It documents areas of application, data sources, algorithms, and potential risks. A repository, on the other hand, is a structured database that stores technical details, documentation, and compliance evidence for these systems. Both tools are essential for the EU AI Act, as they create transparency, facilitate compliance with legal requirements, and support companies in their risk assessment. This enables efficient management and tracking of AI systems as well as proactive compliance with the provisions of the EU AI Act.

2. Classify: Assess risks and assign systems

- Models such as capAI and the NIST AI Risk Management Framework (AI RMF) can help companies assess risks holistically and maximize the financial value of AI use cases. capAI was developed as a procedure for assessing the conformity of AI systems with the EU AI Act and provides clear guidelines for identifying regulatory, ethical, and technical risks at an early stage. This helps companies develop strategies that not only minimize risks but also increase the value of their AI applications. The NIST framework complements this approach by addressing technical and organizational risks throughout the entire AI lifecycle. It provides a structured way to analyze and manage vulnerabilities in a targeted manner, which increases the efficiency of investments in AI use cases. By combining both approaches, companies can make informed decisions that ensure compliance and maximize the long-term financial benefits of their AI systems.

3. Document: Create technical documentation and risk analyses

- Effective documentation first covers all regulatory requirements, such as creating technical documentation and risk assessments and ensuring that decisions are traceable. This ensures compliance and minimizes legal risks. Second, it includes a cost-benefit analysis of each use case, for example by evaluating efficiency gains, cost savings, or revenue increases. By linking compliance requirements to a strategic benefit analysis, companies can not only minimize regulatory risks, but also secure the long-term profitability of their AI investments.

4. Strengthen governance: Define internal responsibilities and processes

- Clarifying responsibilities for AI systems goes beyond the regulatory requirements of the EU AI Act and is crucial for the efficient implementation and economic success of AI initiatives. Clear roles promote collaboration and minimize risks, as responsibilities for development, deployment, and monitoring are clearly defined. The hub-and-spoke model is a proven approach here: a central hub sets standards and guidelines, while decentralized spokes implement and adapt specific AI use cases. This model promotes flexibility and scalability and supports change management to deal with technological and organizational changes. This not only ensures compliance, but also maximizes long-term business value.

- The AI officer plays a central role: they ensure compliance with regulatory obligations, coordinate risk assessments, establish governance structures, and embed ethical principles in the AI lifecycle. This person can also support communication with stakeholders and drive the strategic integration of AI applications. In addition, an AI ethics advisory board can provide further transparency and oversight.

5. Offer training: Raise awareness among departments and management

- AI training is much more than just a measure to comply with the EU AI Act. It enables employees to overcome their fears of working with AI and recognize the potential of the technology. It is particularly important that such training enables employees to independently develop innovative AI use cases. This promotes a culture of initiative and opens up new business opportunities. At the same time, a better understanding of the technology ensures that AI systems are used safely and efficiently, which increases the long-term success of the company.

6. Use sandboxes: Test new systems under supervision

- Coding sandboxes and AI regulatory sandboxes serve different purposes and target groups, but both are essential for safe and innovative AI development.

- Coding sandboxes provide developers with isolated environments to test code risk-free and identify technical errors at an early stage. They promote creativity and technical innovation without jeopardizing existing systems.

- AI regulatory sandboxes, on the other hand, are regulated environments mandated by EU member states where AI systems are tested under supervision to ensure their compliance with the EU AI Act. These sandboxes aim to minimize risks to fundamental rights, health, and safety while promoting innovation.
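The inventory from step 1, combined with the risk assignment from step 2 and the documentation from step 3, can start out as a very small data structure. The following is a minimal sketch under stated assumptions: `AISystemRecord`, `REQUIRED_EVIDENCE`, and `missing_evidence` are hypothetical names, the two example systems are invented, and the rule that high-risk entries need technical documentation and a risk analysis on file is a simplification for illustration. The fields follow the inventory description above (areas of application, data sources, algorithms, potential risks).

```python
from dataclasses import dataclass, field


@dataclass
class AISystemRecord:
    """One entry in a hypothetical AI inventory."""
    name: str
    area_of_application: str
    data_sources: list[str]
    algorithm: str
    risk_tier: str  # "unacceptable", "high", "limited", or "minimal"
    potential_risks: list[str] = field(default_factory=list)
    # Documents already stored in the repository for this system.
    evidence: set[str] = field(default_factory=set)


# Assumed, simplified rule: which evidence each tier needs on file.
REQUIRED_EVIDENCE = {
    "high": {"technical documentation", "risk analysis"},
}


def missing_evidence(record: AISystemRecord) -> list[str]:
    """Return the required documents that are not yet on file, sorted by name."""
    required = REQUIRED_EVIDENCE.get(record.risk_tier, set())
    return sorted(required - record.evidence)


# Invented example entries for illustration only.
inventory = [
    AISystemRecord(
        name="cv-screening",
        area_of_application="HR",
        data_sources=["applicant CVs"],
        algorithm="gradient boosting",
        risk_tier="high",
        potential_risks=["biased ranking"],
        evidence={"risk analysis"},
    ),
    AISystemRecord(
        name="support-chatbot",
        area_of_application="customer service",
        data_sources=["FAQ articles"],
        algorithm="large language model",
        risk_tier="limited",
    ),
]

for record in inventory:
    gaps = missing_evidence(record)
    status = "complete" if not gaps else "missing: " + ", ".join(gaps)
    print(f"{record.name} ({record.risk_tier}): {status}")
```

Keeping the inventory entry and its repository evidence in one record makes documentation gaps visible per system; in practice the two would more likely live in separate, linked stores.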

Ready for the next steps?

The EU AI Act presents many companies with new challenges – but also with great opportunities. adesso supports you in exploiting this potential safely and strategically. Let's work together to ensure that your AI initiatives are not only legally compliant, but also future-proof.

Deadlines

Implementation will take place in stages:

- Since February 2, 2025: Ban on AI systems with unacceptable risk.

- 2025–2026: Gradual entry into force of regulations on GPAI, high-risk systems, governance, and transparency.

- By early 2026: Companies must have implemented training, risk analyses, and documentation requirements.

The deadlines vary depending on risk class and role, so early preparation is crucial to avoid implementation pressure and fines.

Conclusion: Act now to be prepared

The EU AI Act is more than just regulation; it is a signal for responsible innovation. Companies that act now will not only ensure compliance, but also build trust and strategic added value. We are happy to support you, from gap analysis and training concepts to audit preparation.