20 February 2026, by Dr. Michael Peichl

Agent engineering instead of gimmicks: The path to secure AI applications with Mosaic AI

The first encounter with generative AI usually follows the same pattern in companies. Initial fascination with the eloquent language skills of models such as ChatGPT is quickly followed by disillusionment in a business context. When complex technical questions are asked, the verdict of the specialist departments is often: ‘That sounds good, but the content is not reliable.’

This is precisely where adoption stalls in many German companies. They are stuck in the pilot phase: there are numerous experiments, but hardly any productive applications. The reason is simple: in German SMEs, reliability is non-negotiable. If an AI system outputs incorrect maintenance intervals or ‘hallucinates’ spare parts, this creates operational and legal risks that no management team can accept.

The location advantage: innovation within the European legal framework

The timing for scaling is ideal, both technologically and strategically. Digital sovereignty is no longer just a buzzword, but can be implemented architecturally. Thanks to modern platforms, data remains physically in EU regions, while central governance layers do not just check compliance (e.g. with regard to NIS-2 or the EU AI Act) at the end, but enforce it technically. This enables legally compliant innovation right from the start – a topic we have already highlighted in our Security Deep Dive.

The misunderstanding: eloquence does not equal competence

To develop reliable agents, we first need to demystify how large language models (LLMs) work. These models are statistical machines, not knowledge databases. They predict the most probable next word given the preceding text. This is also why they can formulate factually incorrect statements very persuasively.

The model can be thought of as a theorist who has memorised all the manuals but has never worked on the machine himself. If he lacks the specific contextual knowledge to solve an acute problem, he improvises. However, this does not mean that the technology is useless. It simply means that we need to ‘guide’ AI systems technically – through validated data, clear guidelines and continuous quality testing.

Note: If you would like to delve deeper into the organisational tension between the autonomy of AI agents and the necessary control mechanisms, I recommend reading the article by my colleagues, ‘Agentic AI: Efficiency gains, autonomy & questions of control’.

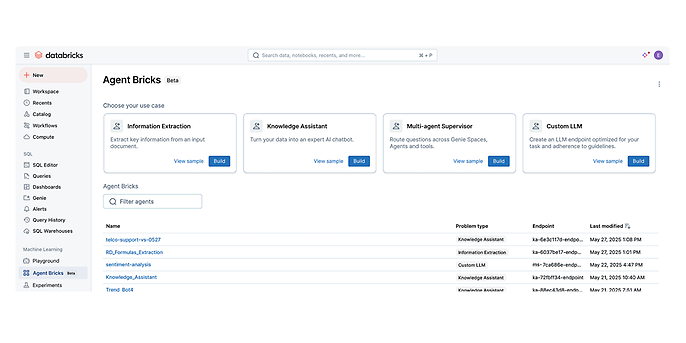

Practical check: From chatbot to process agent

The technical answer to the hallucination problem is RAG (Retrieval Augmented Generation). Relevant passages are first retrieved from the company documents provided, and the model is instructed to base its answer exclusively on them. The Mosaic AI Agent Framework and Agent Bricks from Databricks standardise this process. Agent Bricks accelerates development with ready-made and evaluated patterns.
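To make the RAG idea concrete, here is a minimal sketch in plain Python. It is not the Mosaic AI Agent Framework API: the `Passage` type, the toy word-overlap retriever and the prompt wording are all assumptions chosen for illustration. It only shows the principle that the model sees the retrieved passages, is asked to cite them, and is not called at all when nothing relevant is found.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Passage:
    source: str  # hypothetical source reference, e.g. file and page
    text: str


def retrieve(question: str, passages: list[Passage], top_k: int = 2) -> list[Passage]:
    """Toy retriever: rank passages by the number of words shared with the question."""
    q_words = set(question.lower().split())
    scored = [(len(q_words & set(p.text.lower().split())), p) for p in passages]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    # Passages without any overlap are dropped instead of padding the context.
    return [p for score, p in ranked if score > 0][:top_k]


def build_prompt(question: str, hits: list[Passage]) -> Optional[str]:
    """Assemble a grounded prompt; return None if there is nothing to ground on."""
    if not hits:
        return None
    context = "\n".join(f"[{i}] ({p.source}) {p.text}" for i, p in enumerate(hits, 1))
    return (
        "Answer using only the numbered sources below. "
        "Cite the source number for every statement. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


# Hypothetical document snippets
manual = [
    Passage("manual-2024.pdf, p. 12", "Module X is calibrated with the reference gauge every 12 months."),
    Passage("manual-2024.pdf, p. 40", "Spare parts for pump Y are listed in appendix B."),
]
hits = retrieve("How is module X calibrated", manual)
prompt = build_prompt("How is module X calibrated", hits)
```

In a real system the retriever would be a search index and the prompt would be sent to a model endpoint; the structure of the flow stays the same.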

Experience shows that three scenarios deliver quickly measurable benefits:

1. The Knowledge Assistant (The Expert)

Instead of manually searching through documents, the maintenance team asks, ‘How is module X calibrated according to manual 2024?’ The agent provides the answer, including a source reference to the page and paragraph. This massively reduces search times. However, this requires cleanly prepared data and strict access controls.

2. The Information Extraction Agent (The Clerk)

There is no dialogue here. The agent processes unstructured inputs such as invoices or contracts in the background and transfers relevant facts into structured tables. The result is an automated audit trail that replaces manual data entry.

3. The Supervisor Agent (The Manager)

In so-called ‘compound AI systems’ (see ‘The Shift from Models to Compound AI Systems’, The Berkeley Artificial Intelligence Research Blog), a higher-level agent delegates tasks. A customer request is analysed and distributed to specialised tools (e.g. address data from CRM, conditions from SAP). In critical areas, the agent does not make the final decision, but prepares decisions for humans (human-in-the-loop).
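The delegation pattern of the supervisor agent can be sketched in a few lines. This is an illustration under stated assumptions, not Agent Bricks code: the two tool functions, the keyword-based routing (a stand-in for the intent classification an LLM would perform) and the `CRITICAL` set are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


# Hypothetical tools; real ones would query the CRM and SAP systems.
def lookup_address(customer_id: str) -> str:
    return f"address record for {customer_id}"


def lookup_conditions(customer_id: str) -> str:
    return f"contract conditions for {customer_id}"


TOOLS: dict[str, Callable[[str], str]] = {
    "address": lookup_address,
    "conditions": lookup_conditions,
}

# Keyword routing stands in for LLM-based intent detection.
ROUTES: dict[str, list[str]] = {
    "address": ["address", "delivery"],
    "conditions": ["price", "discount", "conditions"],
}

# Results from these tools are only prepared, never finalised by the agent.
CRITICAL = {"conditions"}


@dataclass
class Result:
    tool: str
    output: str
    needs_human_approval: bool


def supervise(request: str, customer_id: str) -> list[Result]:
    """Send the request to every matching tool and flag critical results."""
    text = request.lower()
    results = []
    for tool_name, keywords in ROUTES.items():
        if any(keyword in text for keyword in keywords):
            output = TOOLS[tool_name](customer_id)
            results.append(Result(tool_name, output, tool_name in CRITICAL))
    return results


results = supervise("Please confirm the delivery address and my discount", "C-42")
```

The `needs_human_approval` flag is the human-in-the-loop hook: a downstream workflow would hold such results until a person signs them off.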

Source: Agent Bricks | Databricks

Quality assurance as an engineering discipline

A chatbot that performs well on five test questions is still a long way from being ready for production. How does the system behave when faced with hundreds of simultaneous queries and rare edge cases? In professional software engineering, hope is not a strategy.

We therefore establish three safety nets in the architecture:

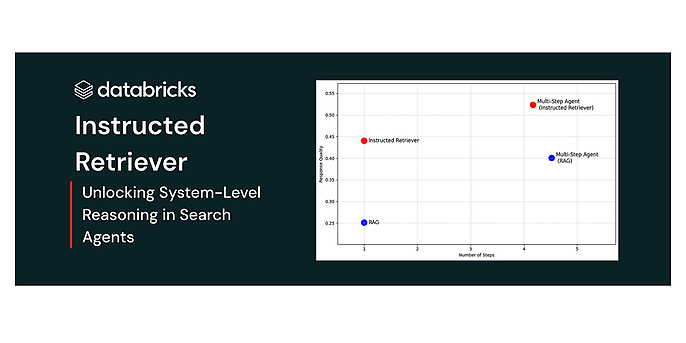

1. Precise retrieval (Instructed Retriever & Hybrid Search; see ‘Instructed Retriever: Unlocking System-Level Reasoning in Search Agents’, Databricks Blog): Pure vector search is often too imprecise for technical details. If a specialist searches for item number ‘X-900’, a semantically similar document about ‘X-800’ is useless. Databricks therefore combines classic keyword search (for hard facts) with vector search (for context). The Instructed Retriever better understands the search intention, while a reranking model filters the hits again before the answer is generated.

2. Automated evaluation (Mosaic AI Agent Evaluation; see ‘Introducing Enhanced Agent Evaluation’, Databricks Blog): We don't deploy code without unit tests – the same must apply to AI. With Agent Evaluation, we run the system against a defined test data set (golden set). An ‘LLM-as-a-Judge’ automatically evaluates the answers according to criteria such as correctness and source reference. This gives us objective quality metrics instead of subjective assessments.

3. Up-to-date information through vector search: Outdated knowledge is a risk. If documents or prices change, the agent must know this immediately. Mosaic AI Vector Search (see ‘Mosaic AI Vector Search’, Databricks on AWS documentation) uses Delta Sync to automatically keep the search index up to date as soon as the underlying data in the lakehouse changes.
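Why combining keyword and vector search helps with the ‘X-900’ example can be shown with a small sketch. We use reciprocal rank fusion (RRF) here because it is a common and simple way to merge two result lists; whether and how Databricks uses it internally is not something this article claims. The document IDs and both hit lists are hypothetical.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists: each document scores 1 / (k + rank) per list."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# Hypothetical results for the query "X-900":
keyword_hits = ["doc-x900"]                         # exact match on the item number
vector_hits = ["doc-x800", "doc-x900", "doc-pump"]  # semantically similar documents

fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
```

Vector search alone ranks the X-800 document first. After fusion, the X-900 document leads because it appears in both lists. A reranking model would then reorder this fused shortlist once more.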

Source: Instructed Retriever: Unlocking System-Level Reasoning in Search Agents | Databricks Blog
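The evaluation loop behind the second safety net (golden set plus judge) follows a simple structure, sketched below. This is not the Mosaic AI Agent Evaluation API. The `GoldenCase` and `Answer` types, the toy agent and the deterministic `simple_judge` are assumptions; in practice the judge would be an LLM call that scores each answer against the same criteria.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class GoldenCase:
    question: str
    expected_fact: str
    expected_source: str


@dataclass
class Answer:
    text: str
    source: str


def simple_judge(case: GoldenCase, answer: Answer) -> dict[str, bool]:
    """Deterministic stand-in for an LLM judge: checks fact and source reference."""
    return {
        "correct": case.expected_fact.lower() in answer.text.lower(),
        "source_ok": answer.source == case.expected_source,
    }


def evaluate(
    agent: Callable[[str], Answer],
    golden_set: list[GoldenCase],
    judge: Callable[[GoldenCase, Answer], dict[str, bool]] = simple_judge,
) -> dict[str, float]:
    """Run the agent on every golden case and return the pass rate per criterion."""
    if not golden_set:
        raise ValueError("golden set must not be empty")
    verdicts = [judge(case, agent(case.question)) for case in golden_set]
    return {
        metric: sum(v[metric] for v in verdicts) / len(verdicts)
        for metric in ("correct", "source_ok")
    }


# Hypothetical golden set and a toy agent that gets one of two cases wrong.
golden_set = [
    GoldenCase("Calibration interval of module X?", "12 months", "manual-2024.pdf"),
    GoldenCase("Torque for bolt B?", "35 Nm", "manual-2024.pdf"),
]
canned = {
    "Calibration interval of module X?": Answer("The interval is 12 months.", "manual-2024.pdf"),
    "Torque for bolt B?": Answer("Use 40 Nm.", "manual-2019.pdf"),
}
metrics = evaluate(lambda question: canned[question], golden_set)
```

Run in a CI pipeline, such metrics can gate a deployment in the same way a failing unit test does.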

Strategic independence: Open Source First

Many companies are currently heavily dependent on the models of individual US providers. This poses risks in terms of pricing, availability and data protection. With Mosaic AI, Databricks enables an ‘Open Source First’ approach (for example, with Llama 3 or DBRX).

This offers you three advantages:

- Data protection: Inference runs in your own cloud environment, so no data leaves your controlled area (‘secure and compliant’, see https://www.databricks.com/product/machine-learning/mosaic-ai-training).

- Customisability: With Mosaic AI Model Training (https://www.databricks.com/product/machine-learning/mosaic-ai-training), open models can be fine-tuned on your own company terminology. This is often a more cost-effective route to higher precision than relying on huge generic models.

- Control: You retain sovereignty over the ‘brain’ of your application.

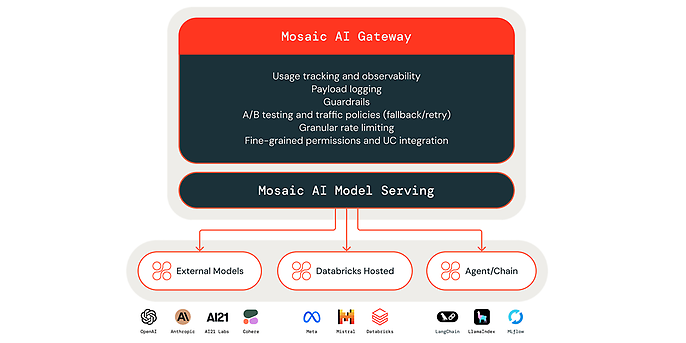

The gateway as a governance firewall

Technically, access is controlled via the Mosaic AI Gateway (https://www.databricks.com/blog/new-updates-mosaic-ai-gateway-bring-security-and-governance-genai-models). It acts as a central interface that monitors all requests to models. This is where policies for rate limiting, access control and filtering of sensitive data (PII) are enforced. In view of regulations such as the EU AI Act, this central logging and control is essential.
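What such a central gateway does can be illustrated with a small sketch. It is not the Mosaic AI Gateway API, where these policies are configured on the platform rather than hand-coded. The `Gateway` class, the sliding-window rate limit and the e-mail pattern as the only PII filter are simplifying assumptions.

```python
import re
import time
from collections import defaultdict, deque
from typing import Callable

# Deliberately simple pattern; real PII detection covers far more than e-mails.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


class Gateway:
    """Single entry point that rate-limits, masks PII and logs every request."""

    def __init__(self, max_requests: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock
        self._history: dict[str, deque] = defaultdict(deque)
        self.audit_log: list[tuple[str, str]] = []

    def handle(self, user: str, prompt: str, model: Callable[[str], str]) -> str:
        now = self.clock()
        history = self._history[user]
        # Forget requests that have left the sliding window.
        while history and now - history[0] >= self.window_seconds:
            history.popleft()
        if len(history) >= self.max_requests:
            self.audit_log.append((user, "rejected: rate limit"))
            raise PermissionError(f"rate limit exceeded for {user}")
        history.append(now)
        masked = EMAIL.sub("[EMAIL]", prompt)  # mask before the model sees it
        self.audit_log.append((user, "forwarded"))
        return model(masked)


def echo_model(prompt: str) -> str:
    """Hypothetical stand-in for a model endpoint."""
    return prompt


gateway = Gateway(max_requests=2, window_seconds=60.0)
reply = gateway.handle("alice", "Mail max@example.com about X-900", echo_model)
```

Because every request passes through one place, the audit log is complete by construction, which is the property that matters for regulatory reporting.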

Source: Mosaic AI Gateway https://www.databricks.com/blog/new-updates-mosaic-ai-gateway-bring-security-and-governance-genai-models

Conclusion: Out of the lab

We are at a turning point. The technology is ready for productive use. The challenge is no longer feasibility, but rather clean integration into existing processes and ensuring governance. With the Mosaic AI Agent Framework and open models, we are transforming data from a passive archive into active knowledge.

But be careful: if you build a lot of agents, you will soon have a lot of data pipelines. In the next article, we will explain how to keep this new toolbox maintainable.

Your AI, your rules

Are you tired of proofs of concept that don't scale? At adesso, we support you in making the transition from the laboratory to productive value creation. We implement RAG systems based on open standards – secure, measurable and value-adding.