5 February 2026, by Dr. Michael Peichl

The best of both worlds: SAP process knowledge meets the AI power of Databricks

In business practice, the distribution of roles is usually set in stone: the SAP system acts as the ‘system of record’ – it is the authoritative source of truth for transactions. But when it comes to using this data for generative AI, predictive maintenance or the analysis of unstructured data, the classic SAP architecture often reaches its limits.

Why use Databricks for SAP data at all?

The answer lies in specialisation: while SAP is unrivalled when it comes to process stability and structured accounting data, Databricks provides the necessary environment for modern machine learning – from GPU-based training and Python-based data science to hosting open LLMs. The challenge until now has been to connect these two worlds without creating massive data silos.

So far, the only way to connect them has been to build extensive ETL pipelines. In concrete terms, this has meant high development costs, rising storage costs due to duplicated data and, worst of all, latency built into the batch process itself: if a report is only available the next day, departments end up making decisions based on outdated figures.

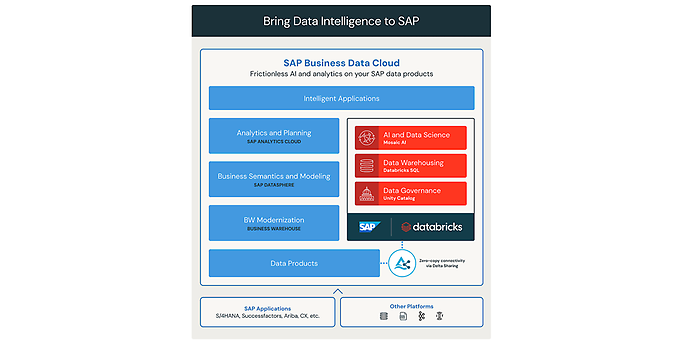

The technological landscape is currently undergoing massive change. The partnership between SAP and Databricks is providing new integration patterns that eliminate this latency architecturally and apply the AI strengths of Databricks directly to SAP data.

The technological lever: logical access instead of physical copies

The solution lies in virtualising data access. Technically, this is enabled by the open Delta Sharing protocol (Databricks Delta Sharing - Data Sharing | Databricks), which fundamentally changes how data is provided.

Instead of physically extracting, transporting and re-storing data, the Databricks Lakehouse accesses the data products in SAP Datasphere logically. To data scientists and analysts, the data looks as if it were stored locally in the Lakehouse, but physically it remains securely in the SAP system.

Zero-copy architecture with Delta Sharing (source: Introducing SAP Databricks | Databricks Blog)

Architectural advantages of zero-copy access

This approach offers three key architectural advantages:

- 1. Avoidance of redundancy: We significantly reduce storage and egress costs as there is no need to set up duplicate data storage for pure analysis purposes.

- 2. Preservation of semantics: We don't just extract cryptic technical tables such as KNA1 (customer master) or MARA (material master), but also access the semantic models. The business logic and relationships between the data objects are preserved and do not have to be laboriously reconstructed in Databricks.

- 3. Real-time instead of ‘yesterday's data’: Since there is no longer a physical copying step, your analyses always work on the most up-to-date data. The delay between a posting in SAP and its visibility in the dashboard no longer depends on a nightly batch run.
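The freshness argument can be illustrated with a toy model. The following Python sketch is not Delta Sharing code: `SourceSystem`, `physical_copy` and `logical_view` are hypothetical names chosen for illustration, and the in-memory list merely stands in for an SAP data product. It only shows the principle that a copy is frozen at extraction time, while logical access reads the current state of the source on every query.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass(frozen=True)
class Posting:
    doc_id: str
    amount: float


@dataclass
class SourceSystem:
    """Hypothetical stand-in for an SAP data product (not a real SAP or Databricks API)."""
    postings: List[Posting] = field(default_factory=list)

    def post(self, doc_id: str, amount: float) -> None:
        self.postings.append(Posting(doc_id, amount))


def physical_copy(source: SourceSystem) -> List[Posting]:
    # ETL-style extract: the result is a snapshot frozen at copy time.
    return list(source.postings)


def logical_view(source: SourceSystem) -> Callable[[], List[Posting]]:
    # Zero-copy style access: nothing is stored; each call reads the source's current state.
    return lambda: list(source.postings)


def turnover(rows: List[Posting]) -> float:
    return sum(p.amount for p in rows)


erp = SourceSystem()
erp.post("4900000001", 1200.0)

nightly_extract = physical_copy(erp)  # copy taken by last night's batch job
live = logical_view(erp)              # logical access, no copy

erp.post("4900000002", 300.0)         # posted after the batch job ran

print(turnover(nightly_extract))  # 1200.0 (misses the new posting)
print(turnover(live()))           # 1500.0 (includes the new posting)
```

In a real zero-copy setup, the "view" would be a shared table that the query engine resolves at query time. Network and query time still apply, so the gain lies in removing the batch cycle, not in making reads instantaneous.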

The implementation strategy: virtualisation or ingestion?

Databricks provides the technology, but the architecture decision lies in the implementation. There is no ‘one-size-fits-all’ solution. In modern projects, therefore, a strict distinction is made according to the use case:

- The virtual path (zero copy): This is the preferred standard for business intelligence and ad hoc analyses. If a controller wants to see the current turnover, virtual access is ideal: fast, up-to-date and cost-efficient.

- The physical path (Lakeflow Connect): There are scenarios in which virtualisation reaches its limits, for example when training complex AI models that require historical snapshots or generate massive I/O loads. This is where native connectors via Lakeflow are used to transfer data incrementally into Delta Lake with ACID transaction guarantees.
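Incremental loading on the physical path can be sketched in the same toy style. The function below is a hypothetical illustration of the common watermark-plus-upsert pattern, not the Lakeflow Connect API or its change format: it applies only changes newer than the last loaded timestamp and builds the new table version on a copy, loosely mimicking the all-or-nothing commit that Delta Lake's transaction log provides.

```python
from typing import Dict, List, Tuple

Row = Dict[str, object]
# Hypothetical change record: (business key, row payload, change timestamp).
Change = Tuple[str, Row, int]


def incremental_merge(
    target: Dict[str, Row], changes: List[Change], watermark: int
) -> Tuple[Dict[str, Row], int]:
    """Apply only changes newer than the watermark; return (new table version, new watermark).

    The input table is never modified. The caller either adopts the returned
    version as a whole or keeps the old one, a loose analogy to an atomic commit.
    """
    staged = dict(target)  # work on a copy of the current version
    new_watermark = watermark
    for key, payload, ts in sorted(changes, key=lambda change: change[2]):
        if ts <= watermark:
            continue  # already loaded in an earlier run
        staged[key] = payload  # upsert: insert new keys, overwrite existing ones
        new_watermark = max(new_watermark, ts)
    return staged, new_watermark


# First run loads two material records.
table: Dict[str, Row] = {}
wm = 0
table, wm = incremental_merge(
    table,
    [("M-100", {"desc": "Pump", "price": 250}, 1),
     ("M-200", {"desc": "Valve", "price": 40}, 2)],
    wm,
)

# Second run: one price change plus a record that was already loaded.
table, wm = incremental_merge(
    table,
    [("M-200", {"desc": "Valve", "price": 45}, 3),
     ("M-100", {"desc": "Pump", "price": 250}, 1)],
    wm,
)
print(wm, table["M-200"]["price"], len(table))  # 3 45 2
```

The watermark is what makes repeated runs cheap: each run only touches records that changed since the previous one, instead of re-extracting the full table.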

The art of engineering lies in combining these two approaches in a hybrid architecture in such a way that you get the best of both worlds.
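A hybrid architecture ultimately needs a routing rule per use case. The following Python sketch encodes the rule of thumb from the two paths above; `UseCase`, its two flags and `choose_path` are hypothetical names, and real decisions will weigh further criteria such as cost and data volume.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    name: str
    needs_historical_snapshots: bool  # e.g. reproducible training data sets
    heavy_io: bool                    # e.g. repeated full scans during model training


def choose_path(use_case: UseCase) -> str:
    """Hypothetical rule of thumb: virtual by default, physical where virtualisation hits its limits."""
    if use_case.needs_historical_snapshots or use_case.heavy_io:
        return "ingestion"       # physical path: incremental load into Delta Lake
    return "virtualisation"      # virtual path: zero-copy access


portfolio = [
    UseCase("controller turnover dashboard", needs_historical_snapshots=False, heavy_io=False),
    UseCase("demand forecast model training", needs_historical_snapshots=True, heavy_io=True),
]
for uc in portfolio:
    print(f"{uc.name} -> {choose_path(uc)}")
```

Making virtualisation the default and ingestion the justified exception keeps duplicate storage limited to the workloads that actually need it.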

Security across system boundaries

The technical opening up of SAP data often raises concerns among security managers. To prevent governance gaps, Unity Catalog acts as the central governance layer.

The advantage of this architecture is synchronisation: security policies and metadata can be mapped across system boundaries. This creates a consistent data lineage. It is technically possible to prove which SAP data record flows into which AI model or dashboard. Access rights are enforced centrally, regardless of whether access is via Python code or SQL query.
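The idea of a single enforcement point with lineage can be reduced to a few lines. The `Catalog` class below is a toy stand-in written for this article, not the Unity Catalog API, and the table and principal names are hypothetical. It shows two properties described above: the same permission check applies regardless of whether a request arrives via SQL or Python, and every permitted read is recorded as a lineage edge from the SAP table to its consumer.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class Catalog:
    """Toy central governance layer written for illustration; not the Unity Catalog API."""
    grants: Dict[str, Set[str]] = field(default_factory=dict)     # table -> principals with read access
    lineage: List[Tuple[str, str]] = field(default_factory=list)  # (source table, consuming asset)

    def grant_select(self, table: str, principal: str) -> None:
        self.grants.setdefault(table, set()).add(principal)

    def read(self, table: str, principal: str, consumer: str, via: str) -> str:
        # One check for every access path: `via` ("sql" or "python") does not change the outcome.
        if principal not in self.grants.get(table, set()):
            raise PermissionError(f"{principal} may not read {table} (via {via})")
        self.lineage.append((table, consumer))  # record which asset consumed which table
        return f"rows of {table}"


catalog = Catalog()
catalog.grant_select("sap.sales.billing_documents", "data_science")

catalog.read("sap.sales.billing_documents", "data_science", "churn_model", via="python")
try:
    catalog.read("sap.sales.billing_documents", "intern", "adhoc_dashboard", via="sql")
except PermissionError as err:
    print(err)  # intern may not read sap.sales.billing_documents (via sql)

print(catalog.lineage)  # [('sap.sales.billing_documents', 'churn_model')]
```

Because denied reads never reach the lineage log, the log only contains data flows that actually happened under a valid grant.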

We will take a closer look at how these governance requirements can be implemented in practice in the next part of the series: ‘Unity Catalog in practice: How to finally combine data democracy and German compliance’.

The payoff: strategic added value and operational excellence

Why go to all this architectural effort? The answer is twofold. First, you gain strategic advantages that would not be possible in a pure SAP world. Second, you give the specialist departments completely new operational tools.

1. The strategic lever: Why connect SAP with Databricks?

The combination of both platforms creates synergies that go far beyond pure reporting:

- Structured meets unstructured (the 360° view): SAP is unrivalled when it comes to structured transaction data, while Databricks is also built to process unstructured data (PDFs, images, IoT logs). Only when you link SAP master data with this information does the context for genuinely useful AI emerge: for example, an agent that not only knows about the loss of revenue, but also analyses the customer's angry email correspondence.

- Time-to-value for AI: Your data scientists get instant access to production data in an environment optimised for machine learning (Python, GPUs, LLMs). With no complex extraction projects, AI pilots can be tested in days instead of months.
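What linking SAP master data with unstructured information means in practice can be sketched as a join on the customer number that produces context for an AI agent. `KUNNR` (customer number) and `NAME1` (name) are real fields of the SAP customer master table KNA1; everything else (the revenue figures, the emails and the `build_context` function) is hypothetical sample data and code for illustration only.

```python
from typing import Dict, List

# Hypothetical sample data. KUNNR and NAME1 are real KNA1 field names;
# the revenue changes and the emails are invented for illustration.
customers: Dict[str, Dict[str, str]] = {
    "0000100001": {"NAME1": "Muster Maschinenbau GmbH"},
    "0000200002": {"NAME1": "Beispiel Antriebe AG"},
}
revenue_change: Dict[str, float] = {"0000100001": -0.18, "0000200002": 0.05}
emails: List[Dict[str, str]] = [
    {"KUNNR": "0000100001", "text": "Third delayed delivery this month. We are reviewing alternatives."},
    {"KUNNR": "0000200002", "text": "Thanks for the quick support."},
]


def build_context(kunnr: str) -> str:
    """Join structured SAP facts and unstructured text on the customer number."""
    name = customers[kunnr]["NAME1"]
    change = revenue_change.get(kunnr, 0.0)
    related = [mail["text"] for mail in emails if mail["KUNNR"] == kunnr]
    lines = [f"Customer {kunnr} ({name}), revenue vs. previous quarter: {change:+.0%}"]
    lines += [f"Email: {text}" for text in related]
    return "\n".join(lines)


print(build_context("0000100001"))
# Customer 0000100001 (Muster Maschinenbau GmbH), revenue vs. previous quarter: -18%
# Email: Third delayed delivery this month. We are reviewing alternatives.
```

The resulting text would be handed to a language model as context, so that its answer about the revenue drop can refer to the customer's own complaints.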

2. The operational benefit: Two paths to AI assistance

Once the foundation has been laid, static reports can be replaced by intelligent assistants. Depending on the complexity of the requirement, there are two paths to choose from:

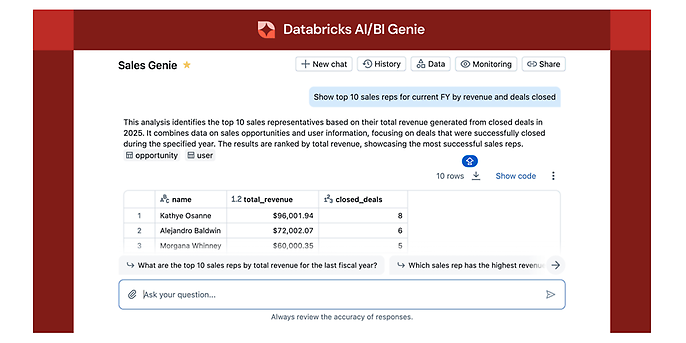

- Path A: Ad hoc analysis with AI/BI Genie

For classic business questions, specialist departments use AI/BI Genie (AI/BI Genie is now Generally Available | Databricks Blog). They interact directly with the data: ‘Analyse the margin development of the “machine components” product group in Q3 and show me the reasons for the deviation.’

The system not only provides text, but also automatically creates appropriate visualisations, recognises complex relationships and learns from feedback. The advantage: genuine self-service analytics without IT having to build a new report for every question.

Source: Databricks AI/BI Genie (AI/BI Genie is now generally available | Databricks Blog)

- Path B: The customised path (custom agents)

Genie is the entry point. If specific process logic is required – for example, if the assistant is not only supposed to read, but also control complex workflows or write back to systems – the Mosaic AI Agent Framework offers the freedom to develop highly specialised agents.

The fourth part of this series, ‘Agent engineering instead of gimmicks: The path to secure AI applications with Mosaic AI’, describes in detail how such agents are professionally developed and evaluated beyond standard tools.

Trust through the semantic layer

One risk with generative AI is ‘hallucination’, for example when the AI calculates revenue incorrectly. A language model does not inherently know the company-specific definition of ‘EBITDA’ or ‘contribution margin’.

To prevent this, metric views (Unity Catalog metric views | Databricks on AWS) are implemented in Unity Catalog. The calculation logic is defined there once, as a single source of truth, and the AI agent uses these certified definitions when it answers instead of deriving its own formulas.

The result: reliable figures that match the official SAP reports.
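The principle behind metric views, one certified definition used by every consumer, can be illustrated independently of the actual metric view syntax. The registry below is a hypothetical Python stand-in, not the Unity Catalog feature itself, and the contribution margin formula is a deliberately simplified placeholder, since each company defines it differently. Because the finance report and the agent call the same definition, they cannot diverge, and a metric without a certified definition raises an error instead of letting the agent improvise a formula.

```python
from typing import Callable, Dict, List

Row = Dict[str, float]

# Hypothetical central registry: every metric is defined exactly once.
# The formula is a simplified placeholder; real definitions are company-specific.
METRICS: Dict[str, Callable[[List[Row]], float]] = {
    "contribution_margin": lambda rows: sum(r["revenue"] - r["variable_costs"] for r in rows),
}


def compute(metric: str, rows: List[Row]) -> float:
    # Refuse to answer rather than improvise a formula for an unknown metric.
    if metric not in METRICS:
        raise KeyError(f"No certified definition for '{metric}'")
    return METRICS[metric](rows)


def finance_report(rows: List[Row]) -> float:
    return compute("contribution_margin", rows)


def agent_answer(rows: List[Row]) -> str:
    return f"Contribution margin: {compute('contribution_margin', rows):,.2f}"


rows = [
    {"revenue": 1000.0, "variable_costs": 600.0},
    {"revenue": 500.0, "variable_costs": 200.0},
]
print(finance_report(rows))  # 700.0
print(agent_answer(rows))    # Contribution margin: 700.00
```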

Conclusion

Modern data architecture resolves the historical dilemma between stability (SAP) and innovation (Databricks). There is no longer any need to choose between the two worlds. Combining them intelligently via zero-copy access creates an infrastructure that is agile enough for AI without compromising the integrity of the master data.

We support you!

Technology is only as good as its implementation. We support you in finding the right balance between virtualisation and ingestion. Let's work out together how to bring your SAP data into the age of AI agents in a way that is secure and adds value.