8. February 2024 By Azza Baatout and Marc Mezger

Hydra - Professional Configuration Management for AI Projects

As software projects grow more complex, managing their configurations efficiently has never been more vital. Hydra promises to change how we handle these configurations, offering a high degree of flexibility and precision. In this blog post, we take a closer look at Hydra, exploring its dynamic capabilities and how it is reshaping configuration management in software development. From the key features that set it apart from traditional tools to a guide for getting started, this post is your starting point for mastering Hydra and streamlining your project configurations.

Introduction to Hydra

In the complex and ever-evolving landscape of software development, managing project configurations efficiently is a challenge that professionals continuously face. Against this backdrop, Hydra stands out as a powerful tool designed to streamline and simplify the configuration process.

Developed with the modern needs of software projects in mind, Hydra offers a solution to the cumbersome and often error-prone task of managing multiple configurations. Its core philosophy revolves around flexibility and adaptability, allowing it to cater to a wide range of project requirements with ease.

At its core, Hydra is a configuration framework that lets developers compose hierarchical configurations dynamically. It is designed for Python applications, but its principles apply to other programming environments as well. This flexibility makes Hydra particularly useful when configurations vary significantly between deployments, for example across the development, testing, and production stages.

Getting Started with Hydra

Hydra is published on PyPI as the package hydra-core and can be integrated into your development environment with either Poetry or Conda. Depending on the environment you work with, install it as follows:

- If you're using Poetry, run:

poetry add hydra-core

- If you're using Conda, install it with pip inside your activated environment:

pip install hydra-core

Now, let's dive into the main features of Hydra and explore how they can enhance your project.

Hierarchical Configuration Composable from Multiple Sources

In a project that spreads its settings over multiple configuration files, a configuration management tool is crucial for keeping the setup flexible and scalable.

Consider a scenario where we deal with several distinct configuration files:

1. model.yaml: configures the model details.

2. dataset.yaml: contains dataset-specific configurations.

3. training.yaml: holds training parameters.

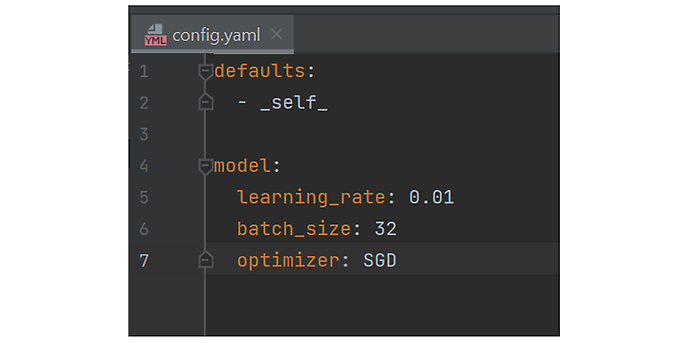

In such a scenario, we create a main configuration file, say config.yaml, that does not contain the actual configuration details but instead references the specific configuration files model.yaml, dataset.yaml, and training.yaml through its defaults list.

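```yaml
# config.yaml: references the other files via Hydra's defaults list
defaults:
  - model@model        # load model.yaml and place it under the "model" key
  - dataset@dataset    # load dataset.yaml under the "dataset" key
  - training@training  # load training.yaml under the "training" key
  - _self_             # apply values defined in config.yaml itself last
```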

The application itself only needs to point Hydra at the primary configuration; Hydra merges the referenced YAML files before calling the decorated function:

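```python
import hydra
from omegaconf import DictConfig, OmegaConf


# config_path: directory holding the YAML files, relative to this Python file
# config_name: primary config file, without the .yaml extension
@hydra.main(config_path="project_config", config_name="app_config")
def my_app(cfg: DictConfig) -> None:
    # cfg is the fully composed configuration
    print(OmegaConf.to_yaml(cfg))


if __name__ == "__main__":
    my_app()
```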

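@hydra.main(config_path="project_config", config_name="app_config") is the decorator that connects a function to its configuration: config_path is the directory containing the YAML files, relative to the Python file, and config_name is the primary configuration file without its .yaml extension (here app_config.yaml, which plays the role of the config.yaml described above). Hydra composes the configuration and passes it to the function as cfg.

Running the script also reveals a side effect: the current working directory has been changed. main.py exists in src/hydra_demo/main.py, but the output shows that the current working directory is src/outputs/2024-01-28/16-22-21. Hydra creates a timestamped output directory for every run and, with its legacy defaults, switches into it, so relative paths in your code resolve against that directory instead of your project folder. This is the most important point to keep in mind when using Hydra. Since Hydra 1.2, passing version_base=None to @hydra.main keeps the original working directory unless hydra.job.chdir=True is set.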

When we run the application, Hydra automatically merges these configurations into a single hierarchical configuration object, following the entries in the defaults list of config.yaml.

For example, when your Python script initializes Hydra, it reads the config.yaml and automatically composes a configuration that includes settings from model.yaml, dataset.yaml, and training.yaml.

By merging multiple configuration files seamlessly and giving every run its own output directory, Hydra significantly enhances the flexibility and scalability of configuration management in complex projects.

Configuration Specified or Overridden from the Command Line

AI projects involve extensive experimentation with different parameters and settings, so the same problem comes up again and again: how to try new values without editing files every time.

One of Hydra's standout features is its command-line flexibility. It enables users to specify or override configurations directly from the command line, a feature that greatly simplifies managing different deployment environments and experimenting with settings without the need for code changes.

In our case, we want to classify images using a neural network. Our project (like any other AI project) involves experimenting with various parameters such as the learning rate, batch size, and the type of optimizer.

```yaml
model:
  name: "ResNet50"

training:
  batch_size: 32
  learning_rate: 0.001
  optimizer: "Adam"
```

Typically, these parameters are defined in a configuration file. However, with Hydra, you can override these parameters directly from the command line, which is especially useful for quick experimentation and iterative testing.

Now, let's say you want to experiment with different learning rates and optimizers without changing the config.yaml file each time. With Hydra, you can easily override these parameters directly from the command line when you run your script.

For example, if you wish to set the learning rate to 0.01 and use the SGD optimizer, you can run your training script with a simple command line instruction like this:

python train.py training.learning_rate=0.01 training.optimizer=SGD

This command tells Hydra to override the learning rate and optimizer settings specified in your configuration file, allowing for rapid, flexible experimentation without the need for continuous file edits.

Dynamic Command Line Tab Completion

Hydra further simplifies configuration management with dynamic command line tab completion, which makes configuration adjustments faster and less error-prone. This is especially useful in complex applications such as AI projects, where you might have numerous parameters and options.

When you type python train.py and press the Tab key, Hydra lists the available configuration keys, such as training.learning_rate and training.optimizer. For config groups (for example, an optimizer group with one file per optimizer), it also suggests the available options, such as SGD, Adam, or RMSprop.

In practice, tab completion has to be enabled once for your shell. Hydra ships completion support for common shells such as bash; in bash, it is enabled for the current session with:

eval "$(python train.py -sc install=bash)"

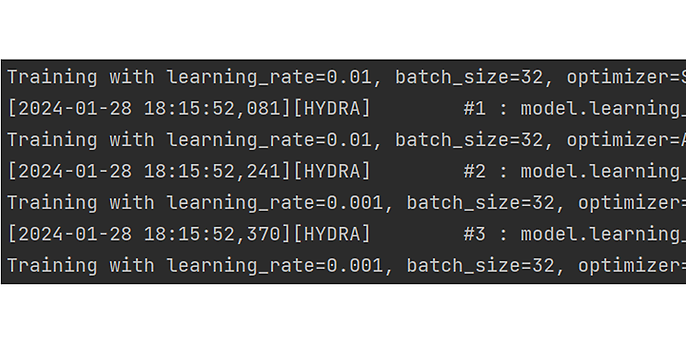

Running Multiple Jobs with Different Arguments

Hydra's multi-run capability is a major advantage for scenarios involving batch processing or comprehensive testing, as it streamlines running applications multiple times with varying configurations using just a single command. This feature is particularly useful when you need to evaluate different operational scenarios or conduct extensive parameter tuning.

For instance, when training a machine learning model, parameters such as the learning rate, batch size, and optimizer are defined in a YAML configuration file, and being able to vary them easily is essential. With the --multirun flag (short form -m), comma-separated values are expanded into separate jobs:

python train.py --multirun training.learning_rate=0.01,0.001 training.optimizer=SGD,Adam

This command will run four separate training jobs, one for each combination of the specified learning rates and optimizers:

1. Learning rate = 0.01, Optimizer = SGD

2. Learning rate = 0.01, Optimizer = Adam

3. Learning rate = 0.001, Optimizer = SGD

4. Learning rate = 0.001, Optimizer = Adam

Each of these runs is executed consecutively with its pair of learning rate and optimizer, which shows how Hydra can manage and simplify complex parameter-tuning tasks.
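To make the job count concrete, the following pure-Python sketch reproduces the idea behind Hydra's default (basic) sweeper: every comma-separated list becomes one axis, and the jobs are the Cartesian product of all axes. It is an illustration, not Hydra's own code, and expand_sweep is a hypothetical helper; for the two parameters above it yields the four combinations in the same order as the list.

```python
import itertools
from typing import Dict, List


def expand_sweep(params: Dict[str, List[str]]) -> List[List[str]]:
    """Turn {"key": [v1, v2], ...} into one override list per job (Cartesian product)."""
    keys = list(params)
    jobs = []
    # itertools.product varies the last key fastest, so the first key forms the outer loop.
    for values in itertools.product(*(params[k] for k in keys)):
        jobs.append([f"{k}={v}" for k, v in zip(keys, values)])
    return jobs


if __name__ == "__main__":
    sweep = {
        "training.learning_rate": ["0.01", "0.001"],
        "training.optimizer": ["SGD", "Adam"],
    }
    for job_num, overrides in enumerate(expand_sweep(sweep)):
        print(job_num, " ".join(overrides))
```

The product grows quickly: adding a third parameter with three values would already turn the four jobs into twelve, which is worth keeping in mind before launching a large sweep.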

The code is publicly available at https://github.com/azzabaatout/hydra-demo.git

Conclusion

Hydra simplifies complex tasks such as running multiple jobs with varied parameters, and features like command-line overrides and dynamic tab completion make it an indispensable asset in modern software development. By addressing the intricate challenges of configuration management, Hydra not only enhances productivity and reduces errors but also lets developers focus on innovation and the core aspects of their projects.

You can find more exciting topics from the world of adesso in our previous blog posts.