16. January 2024 By Azza Baatout and Marc Mezger

LLM operationalisation: a strategic approach for companies

Large language models (LLMs) such as ChatGPT, GPT-4 from OpenAI or Luminous from Aleph Alpha have become revolutionary tools in a world where artificial intelligence (AI) is rapidly gaining in importance. The challenge for companies is not only to utilise these models effectively, but also to integrate them into existing systems. This is where the concept of LLM operationalisation (LLMOps) – a specialised area dedicated to the management and optimisation of the lifecycle of LLMs and LLM-supported applications – comes in.

Why LLMOps is essential

The introduction of LLM-based applications in production environments poses major challenges for developers. Models such as GPT-3 from OpenAI with 175 billion parameters, Llama with 70 billion and Falcon with 180 billion illustrate the scope and capabilities of AI systems today. The successful use of these models requires a robust infrastructure that is capable of running several GPU-based machines in parallel and processing large amounts of data.

The specific challenges in the operationalisation of LLMs include:

- Model size and complexity: The considerable size and complexity of LLMs requires extensive resources for training, fine-tuning and provision for inference (the prediction or generation of responses).

- Data requirements: Training LLMs requires high-quality and extensive data sets, which can be challenging to obtain and process.

- Security and data protection: Strict security protocols are required when LLMs handle sensitive texts in order to ensure privacy and data integrity.

- Performance: Maintaining high performance with a high volume of users requires considerable computing capacity and expertise.

- Greater efficiency: LLMs can be made more efficient and effective with targeted prompt engineering, fine-tuning and the use of methods such as retrieval augmented generation.

The development of new tools and methods is essential to overcome these challenges. These support and manage the entire life cycle of LLM applications and enable the ground-breaking potential of these technologies to be realised in market-ready solutions.

Life cycle management of large language models

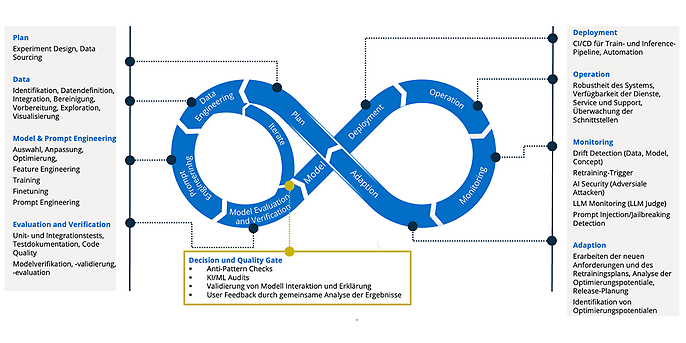

This diagram shows the adesso interpretation of the LLMOps cycle. It is strongly orientated towards the adesso MLOps cycle (defined by Robert Kasseck).

First planning phase

The conceptual orientation begins with the precise definition of objectives, the allocation of resources and the creation of detailed schedules for the development of LLM-based applications. This strategic planning forms the basis for the entire development process.

Data procurement and preparation

The core of this phase is the procurement and careful preparation of training or tuning data. This includes the collection, cleansing and visualisation of data that are crucial for the quality of the training process. As with traditional machine learning, data quality is crucial for excellent results with language models.

Model and prompt engineering

This phase involves fine-tuning the model to specifically optimise the training data and improve performance and accuracy. Prompt engineering is also part of this phase in order to steer the model in certain directions and optimise its reactions.

Evaluation and validation

After the model is trained, it is comprehensively evaluated to ensure that it fulfils the defined performance criteria and accuracy requirements. This includes quantitative methods such as calculating accuracy and the F1 score as well as qualitative approaches such as expert reviews and user feedback. The evaluation of an answer is not trivial, especially when it comes to language models. No metrics can be calculated for tasks such as summarisations or writing articles; very often an actual human being is needed for assessment.

Deployment

CI/CD pipelines enable seamless and automated deployment of models in the production environment. This also includes the provision of new model versions and updates.

Operation, monitoring and maintenance

Continuous monitoring is essential to ensure performance and consistent functionality. Recognising and preventing manipulation attempts is also a critical security aspect.

Iterative improvement (adaptation)

The final phase comprises the continuous adaptation and optimisation of the model based on user feedback and performance metrics. Regular updates adapt the model to changing data landscapes, user requirements and new scientific findings.

Differences between LLMOps and MLOps

LLMOps and MLOps have many similarities in model management. Yet, there are also important differences between the two areas:

- Specialisation in large language models: LLMOps focuses on the unique challenges and characteristics of large language models. In contrast, MLOps focuses on models that are generally smaller and are developed specifically for certain applications.

- Prompt management: LLMOps uses specialised tools that enable the tracking and versioning of prompts – an aspect that is not common in traditional MLOps practice.

- LLM chains: The sequencing of successive LLM calls is a characteristic element of LLMOps, which was developed to address the input limitations of large language models.

- Monitoring: LLMOps integrates monitoring and maintenance procedures that are specifically tailored to the needs of large language models. This is of crucial importance, given the complexity and high risk potential when evaluating language models.

- Fine-tuning and adjustments: Fine-tuning plays a more central role in LLMOps, although it is also part of MLOps. Companies tend to adapt existing LLMs instead of training them from scratch, as training basic models entails enormous costs and hardware requirements.

In summary, LLMOps is a specialised extension of MLOps that is tailored to the specific needs of large language models. This enables organisations to fully exploit the potential of generative AI.

LLMOps as pioneers of the future

The world of artificial intelligence is developing at a breathtaking speed, and large language models (LLMs) are at the forefront of this revolution. LLMOps is an essential part of this development and offers companies the opportunity not only to push the boundaries of technology, but also to set new standards for human–machine interaction. The challenges associated with the implementation and operation of LLMs are not insignificant, but they can be overcome through strategic planning, reflection on ethical aspects and continuous innovation. The operationalisation of LLMs offers immeasurable potential for increasing efficiency, customer loyalty and the development of new business areas.

The future of LLMOps is characterised by innovation and continuous development. Advances in AI research mean that LLMs are becoming even more powerful, versatile and efficient. We are at the beginning of an era in which AI is not just a tool, but an integral part of the value chain in companies. Operationalising large language models is more than just a technological endeavour; it is a journey into a smarter and more connected world. LLMOps is the linking element that turns the promises of the AI revolution into tangible reality. It is an exciting opportunity for companies and developers to be at the forefront of this ground-breaking change.

Would you like to find out more about the solutions and services adesso offers in the field of AI? Then take a look at our AI website or have a look at our other blog posts on the topic of AI.

You can find more exciting topics from the world of adesso in our previous blog posts.