29. February 2024 By Sascha Windisch and Immo Weber

Retrieval Augmented Generation: LLM on steroids

Vulnerabilities in the AI hype: when ChatGPT reaches its limits

Large Language Models (LLMs), above all ChatGPT, have taken all areas of computer science by storm over the past year. Because they are trained on a broad data set, LLMs are fundamentally application-agnostic. Despite their extensive knowledge, however, they have gaps, especially in highly specialised applications, and in the worst case they paper over these gaps with hallucinations. This becomes particularly evident when highly specialised domain knowledge is queried that, in most cases, is not part of the training data.

In mid-2023, a case in the USA caused a stir and highlighted the limits of ChatGPT and similar tools. A passenger had sued the airline Avianca after being injured in the knee by a trolley on the plane. The plaintiff's lawyer cited several previous decisions, six of which turned out to be fictitious. The lawyer had asked ChatGPT for relevant precedents but had failed to validate them, and ChatGPT had simply invented these judgements. The knowledge queried in this case was so specific that it was not part of the data set used to train ChatGPT.

To nevertheless retrieve highly specialised domain knowledge using a large language model and reduce the risk of hallucinations, the approach known as "Retrieval Augmented Generation" (RAG) has recently become established.

Where does RAG come from?

The term RAG goes back to the 2020 paper "Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks" by Lewis et al. The origins of the method even date back to the 1970s. During this time, scientists developed the first question-answer systems, in particular using natural language processing, albeit initially for very narrowly defined subject areas. In 2011, IBM's Watson question-and-answer system gained worldwide attention after it won against two human players in the quiz programme Jeopardy.

Traditionally, document content has been searched for using keyword searches, full-text searches or index and category searches. The main disadvantage of these classic approaches is that, on the one hand, the search term must be explicitly present in the document and, on the other hand, only explicit text segments from the document base can be reproduced. In contrast, RAG can also find semantically similar information and reproduce it in natural language.

Retrieval Augmented Generation: How do LLMs become subject matter experts?

As the term "General Purpose AI" suggests, LLMs are meant to be usable independently of any specific application. To this end, they are usually trained on an extremely large data set (figures of around 1 petabyte are circulated for GPT-4, although OpenAI has not published the size of its training data). Despite this enormous size, however, this data set in most cases does not contain any highly specialised domain knowledge.

However, there are three main approaches to utilising such domain knowledge using an LLM:

- 1. training your own model from scratch,

- 2. fine-tuning an existing model, and

- 3. using so-called "Retrieval Augmented Generation" (RAG).

In the first approach, it is of course possible in principle to develop an LLM from scratch and train it on the required knowledge. The second approach builds on the existing knowledge of a model that suits the use case and additionally trains it on the domain-specific knowledge. While these first two approaches can involve considerable time and expense, RAG is much easier to implement and is sufficient in most use cases.

How does RAG work?

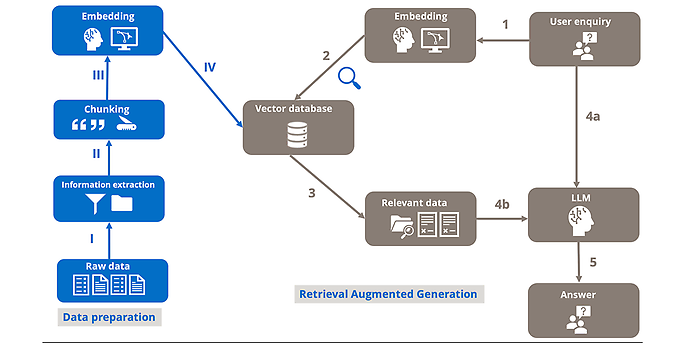

The RAG process can be roughly divided into data preparation and the actual user enquiry.

Data preparation begins with the collection of any raw data, for example text documents such as PDFs or web pages. In the first step, I) the relevant information is extracted from the raw data and II) broken down into text blocks of a defined size. The text blocks are then III) translated into a numerical form that LLMs can process (vectors), using so-called embeddings, and IV) stored persistently in a vector database. This completes the data preparation.
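To make steps I) and II) more tangible, here is a minimal sketch of how a text could be cut into overlapping blocks. The names `Chunk` and `split_into_chunks`, the character-based block size and the overlap are our own assumptions for illustration. Libraries such as LlamaIndex bring their own, more sophisticated splitters.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    """One text block plus the metadata needed to trace it back to its source."""
    source: str    # hypothetical identifier, e.g. a file name
    position: int  # running number of the block within its source
    text: str


def split_into_chunks(text: str, source: str,
                      chunk_size: int = 200, overlap: int = 40) -> list[Chunk]:
    """Cut text into blocks of at most chunk_size characters.

    Neighbouring blocks share `overlap` characters, so content close to a
    block boundary is present in both blocks.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    chunks: list[Chunk] = []
    start = 0
    while start < len(text):
        chunks.append(Chunk(source, len(chunks), text[start:start + chunk_size]))
        if start + chunk_size >= len(text):
            break  # the last block already reaches the end of the text
        start += chunk_size - overlap
    return chunks


if __name__ == "__main__":
    for chunk in split_into_chunks("abcdefghij", "demo.txt", chunk_size=4, overlap=2):
        print(chunk.position, chunk.text)
```

Keeping the source and position with every block is a design choice that pays off later: it allows an answer to be traced back to the passage it was based on.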

When a user submits a query to the RAG system, this query is 1) also embedded first. Then 2) a similarity search (usually using the cosine similarity measure) is performed between the query and the text blocks stored in the vector database. The top-k most similar text blocks are 3) extracted and 4) passed to an LLM of your choice together with the user's request. The LLM finally generates the response to the user enquiry based on the extracted information.
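The query steps 1) to 4) can be sketched in a few lines of plain Python. Please note the assumptions: `toy_embed` is only a word-count stand-in that we made up for this illustration, so it matches identical words and does not capture semantic similarity the way a real embedding model does. The function names, the sample texts and the prompt wording are hypothetical as well.

```python
import math
import re
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Stand-in for an embedding model: a sparse vector of word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse vectors (0.0 if one is empty)."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[tuple[float, str]]:
    """Steps 1) to 3): embed the query, score every block, keep the best top_k."""
    query_vector = toy_embed(query)
    scored = [(cosine_similarity(query_vector, toy_embed(c)), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


def build_prompt(query: str, retrieved: list[tuple[float, str]]) -> str:
    """Step 4): combine the retrieved blocks and the question into one prompt."""
    context = "\n".join(f"- {text}" for _, text in retrieved)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


CHUNKS = [
    "Employees may book economy class flights.",
    "The canteen opens at noon.",
    "Hotel costs are reimbursed up to a fixed rate.",
]

if __name__ == "__main__":
    question = "Which flights may employees book?"
    print(build_prompt(question, retrieve(question, CHUNKS, top_k=1)))
```

In a real system, the blocks would be embedded once during data preparation and the vectors read from the vector database, rather than recomputed for every query as in this sketch.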

Process diagram of a RAG architecture

Which software tools can we use?

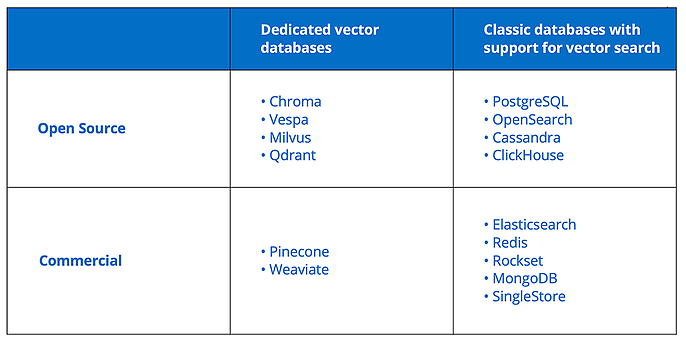

The main software components of a RAG architecture are the embedding model, the vector database and the actual LLM. Both commercial and open-source solutions are available for each of the three components.

The commercial model text-embedding-ada-002 from OpenAI or the embedding models from Cohere are often used for embedding larger blocks of text. The open-source framework Sentence Transformers (www.SBERT.net), for example, is suitable for embedding individual sentences. For an overview of the best current embedding models, we recommend the MTEB Leaderboard on Hugging Face.

With the increasing popularity of RAG, the selection of providers of dedicated vector databases is also growing (Table 1). On the commercial side, the providers Weaviate and Pinecone should be mentioned here, among others. Among the open-source solutions, ChromaDB is frequently used. Other open-source alternatives are LanceDB, Milvus, Vespa and Qdrant.

However, traditional database providers have also recognised the potential of RAG and now support vector search. Here, too, there are both open-source (ClickHouse, Cassandra, PostgreSQL) and commercial solutions (Elasticsearch, Redis, SingleStore, Rockset, MongoDB).

Various commercial and open-source solutions are also available when choosing an LLM. Among the commercial solutions, GPT-4 from OpenAI and the Luminous models from Aleph Alpha are widely used. Like the various open-source models, the latter have the advantage that they can also be operated completely locally. You can find an overview of the currently most powerful open LLMs in the Open LLM Leaderboard on Hugging Face.

Various software frameworks such as Haystack, LangChain and LlamaIndex simplify the implementation of a RAG architecture by abstracting and standardising the syntax of the various elements such as embedding models, vector databases and LLMs.

Overview of vector database providers

What advantages do RAG-based systems offer us?

The advantages of RAG from the user's point of view are obvious.

- 1. As the example mentioned at the beginning shows, LLMs are usually trained on a broad knowledge base but have problems with domain-specific knowledge. This can be provided by RAG without time-consuming fine-tuning.

- 2. Another problem with large language models is that their available knowledge is more or less static. Users of ChatGPT knew this problem only too well: until recently, if you asked the model for the latest news, it usually replied that its knowledge only extended to September 2021. With RAG, however, it is possible to supply the model with knowledge from external documents without retraining and thus keep it up to date. This means, for example, that the knowledge base of an LLM can be updated daily with company data.

- 3. This results in a further advantage: RAG is cost-efficient, as significantly more expensive new development, including training from scratch or fine-tuning existing models, is not necessary.

- 4. RAG also fulfils a core requirement for AI systems in critical applications: transparency and trustworthiness. A RAG framework, as can be implemented with LlamaIndex, for example, allows the sources of the information output by the LLM to be referenced and thus made verifiable.

- 5. As the example of the lawyer using ChatGPT at the beginning of this article shows, hallucinations of LLMs are a major problem. They can be substantially reduced by configuring the RAG system carefully, optimising the prompts and referencing sources. Source references would have quickly made it clear that the six cited court judgements were just figments of the AI's imagination.
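How source referencing makes an answer verifiable can be illustrated with a small sketch. It numbers the retrieved passages in the prompt and afterwards checks whether the answer cites anything that was never supplied. The function names, the citation format `[n]` and the sample texts are our own assumptions and not part of any particular framework.

```python
import re


def build_cited_prompt(question: str, sources: dict[int, str]) -> str:
    """Number every retrieved passage and ask the model to cite the numbers."""
    numbered = "\n".join(f"[{n}] {text}" for n, text in sorted(sources.items()))
    return (
        "Answer using only the numbered sources below and cite them like [1].\n\n"
        f"{numbered}\n\nQuestion: {question}\nAnswer:"
    )


def unknown_citations(answer: str, sources: dict[int, str]) -> set[int]:
    """Return cited source numbers that were never handed to the model."""
    cited = {int(n) for n in re.findall(r"\[(\d+)\]", answer)}
    return cited - set(sources)


if __name__ == "__main__":
    sources = {
        1: "Employees may book economy class flights.",
        2: "Hotel costs are reimbursed up to a fixed rate.",
    }
    print(build_cited_prompt("Which flights may I book?", sources))
    # An answer that cites source 7 refers to something we never supplied.
    print(unknown_citations("Business class is always allowed [7].", sources))
```

A check like this does not prove that an answer is correct, but it flags references that cannot be traced back to a supplied document, which is exactly the kind of signal that was missing in the lawyer's case.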

What are typical use cases?

A typical use case is "chatting" about complex internal company manuals or guidelines, such as travel policies. As these are generally relatively complex and usually lead to a high volume of enquiries in support, RAG can be used to answer specific questions from employees. Instead of laboriously searching for specific information in the document themselves, employees have the opportunity to address their questions directly to the document in everyday language.

The advantages of RAG are also evident in areas of application that depend on continuous updating. For example, a RAG agent in the sports sector could inform users about the latest match results or the progress of a match. While a standalone LLM would have to be fine-tuned at great expense, a RAG architecture only requires the new information to be embedded.
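The point that only new information has to be embedded can be sketched as follows. The class `IncrementalIndex` is a hypothetical, in-memory illustration of the idea and not how a particular vector database works: it remembers a hash of every text it has already seen and calls the embedding function only for unseen texts.

```python
import hashlib
from typing import Callable, Iterable


class IncrementalIndex:
    """Toy index that embeds each distinct text exactly once."""

    def __init__(self, embed: Callable[[str], list[float]]) -> None:
        self._embed = embed                         # injected embedding function
        self._vectors: dict[str, list[float]] = {}  # content hash -> vector
        self._texts: dict[str, str] = {}            # content hash -> original text

    def add(self, texts: Iterable[str]) -> int:
        """Embed only texts that are not in the index yet; return how many were new."""
        added = 0
        for text in texts:
            key = hashlib.sha256(text.encode("utf-8")).hexdigest()
            if key in self._vectors:
                continue  # already embedded, nothing to do
            self._vectors[key] = self._embed(text)
            self._texts[key] = text
            added += 1
        return added

    def __len__(self) -> int:
        return len(self._vectors)


if __name__ == "__main__":
    def fake_embed(text: str) -> list[float]:
        # Placeholder for a real embedding model call.
        return [float(len(text))]

    index = IncrementalIndex(fake_embed)
    print(index.add(["Match A ended 2:1.", "Match B ended 0:0."]))  # both are new
    print(index.add(["Match A ended 2:1.", "Match C ended 3:2."]))  # only one is new
```

The language model itself is not touched in this process, which is what makes daily or even more frequent updates practical.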

Let's get started - First steps into the RAG world!

This chapter will demonstrate the practical use of RAG using a few examples. To keep the examples manageable, we will not include typical components of a production system, such as user authorisations or the handling of exception and error situations.

For the process described above, in which various tools and language models work together, libraries have been developed over the past few months that abstract the process chain and thus offer relatively simple and standardised access to the various tools.

This makes it possible, for example, to switch between licensed commercial models, open-source models and on-premises deployments via configuration, without having to adapt the source code.
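The principle behind such a configuration switch can be shown without any framework. The following sketch is a generic pattern under our own assumptions: `hosted_llm` and `local_llm` are placeholders that only return a string, and the registry and configuration keys are invented for this example. It is not meant to describe how LlamaIndex, LangChain or Haystack implement this internally.

```python
from typing import Callable

LLM = Callable[[str], str]  # anything that maps a prompt to an answer


def hosted_llm(prompt: str) -> str:
    """Stand-in for a call to a commercial, hosted model."""
    return f"[hosted] {prompt}"


def local_llm(prompt: str) -> str:
    """Stand-in for a model running on-premises."""
    return f"[local] {prompt}"


# Hypothetical registry: configuration value -> implementation.
LLM_REGISTRY: dict[str, LLM] = {"hosted": hosted_llm, "local": local_llm}


def make_llm(config: dict) -> LLM:
    """Pick the implementation named in the configuration."""
    name = config.get("llm", "hosted")
    if name not in LLM_REGISTRY:
        raise ValueError(f"unknown llm {name!r}, expected one of {sorted(LLM_REGISTRY)}")
    return LLM_REGISTRY[name]


def answer(question: str, config: dict) -> str:
    """Application code: it never names a concrete model."""
    return make_llm(config)(question)


if __name__ == "__main__":
    print(answer("What is RAG?", {"llm": "hosted"}))
    print(answer("What is RAG?", {"llm": "local"}))
```

Because `answer` only depends on the common interface, changing the model is a matter of editing the configuration value.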

At the time of writing this blog entry, the best-known libraries are LlamaIndex, LangChain and Haystack. All three are available as open source.

For this blog series, we start the examples with LlamaIndex.

LlamaIndex is an open-source framework that enables developers to connect large language models (LLMs) with external tools and data. It offers a range of functions that simplify the development of LLM applications, including standard interfaces:

- for data acquisition and processing,

- for the indexing (embedding) of data in various vector databases, and

- for the various query interfaces, such as question/answer endpoints or chatbots.

To follow the examples hands-on, you need a Python environment, such as Anaconda.

LlamaIndex is installed using the command:

pip install llama-index

For better traceability of the internals, we recommend activating logging.

The following imports and configurations are used for this purpose:

import logging

import sys

# Raise the logging level so that we see all messages.

logging.basicConfig(stream=sys.stdout, level=logging.DEBUG)

logging.getLogger().addHandler(logging.StreamHandler(stream=sys.stdout))

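The only two other imports we need for a first example are shown below. Since version 0.10 of LlamaIndex, they live in the llama_index.core package; older releases used "from llama_index import ..." instead.

from llama_index.core import SimpleDirectoryReader, VectorStoreIndex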

With the SimpleDirectoryReader, we use a component that reads data from directories and returns it as document objects. The VectorStoreIndex is a powerful component that both embeds the documents and generates an index, which we can then query. In our example, the "data" subdirectory contains any text files that we want to load into the vector index. Note that, unless configured otherwise, LlamaIndex uses OpenAI models for embedding and answer generation, so the example expects an API key in the OPENAI_API_KEY environment variable.

# Create the index from the documents.

documents = SimpleDirectoryReader("data").load_data()

index = VectorStoreIndex.from_documents(documents)

The index object with the stored embedding data only exists here in the computer's main memory. We can now create a query from the index object and output the result:

# The index is ready and can now be queried.

query_engine = index.as_query_engine()

response = query_engine.query("What is generative AI?")

print(response)

To summarise, a RAG process with LlamaIndex can be developed and executed with just a handful of lines of code.

A second example shows how we can read a previously created index from the hard drive or persistently save a newly created index.

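import os

from llama_index.core import SimpleDirectoryReader, StorageContext

from llama_index.core import VectorStoreIndex, load_index_from_storage

StorageDir = "./storage"

if os.path.exists(StorageDir):

    # Load the existing index from disk.

    storage_context = StorageContext.from_defaults(persist_dir=StorageDir)

    index = load_index_from_storage(storage_context)

else:

    # Create the index from the documents.

    documents = SimpleDirectoryReader("data").load_data()

    index = VectorStoreIndex.from_documents(documents)

    # Save the index so that the next run can load it.

    index.storage_context.persist(persist_dir=StorageDir)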

From here, the index object can be used again.

What happens next?

This blog post only provides an initial overview of the topic of RAG. In further blog posts, we will explore the topic in more depth and, among other things, discuss the use of local RAG systems for different specialist areas or more specialised areas of application. For example, RAG can also be used via Text2SQL queries to submit queries to data warehouses in natural language. There are also numerous ways to improve the conventional RAG architecture using special techniques such as RAG Fusion, HyDE, Context Expansion or Query Transformation.
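As a small foretaste, one of these techniques is easy to sketch. As we understand RAG Fusion, it sends several variants of the user query through retrieval and merges the resulting ranked lists with reciprocal rank fusion: each document receives 1 / (k + rank) points per list, and the points are summed. The function name, the document identifiers and the constant k = 60 (a commonly used value) are assumptions made for this illustration.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Merge several ranked lists of document ids into one fused ranking."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        # Ranks start at 1, so the best hit of a list contributes 1 / (k + 1).
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical retrieval results for three variants of the same question.
    rankings = [
        ["a", "b", "c"],
        ["b", "a", "d"],
        ["b", "c", "a"],
    ]
    for doc_id, score in reciprocal_rank_fusion(rankings):
        print(doc_id, round(score, 4))
```

Documents that appear near the top of several lists end up first, even if no single query variant ranked them best.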
