12 January 2024, by Christian Hammer

The EU AI Act: shaping Europe’s regulation on AI at the end of 2023

The negotiations on the EU AI Act came to a favourable end just as 2023 drew to a close: after tough talks, the European Parliament and the Council of the European Union reached a provisional agreement. There had long been a debate about what exactly falls under the term artificial intelligence and whether, for example, systems, technologies or use cases should be categorised into risk levels. Once these and various other fundamental decisions had been made, a whole series of details had to be clarified. In particular, there were many discussions on unacceptable-risk AI systems (the highest risk level) and high-risk AI systems. In part, national interests were at the forefront here, as the ban on real-time biometric identification in public spaces would have meant that some counter-terrorism practices were no longer permitted. In part, however, the development of certain technologies made a new categorisation necessary: how should so-called ‘foundation models’ or generative AI be handled?

The new law now covers the safe handling of general-purpose AI (GPAI), a restriction (rather than an outright ban) on the use of biometric identification systems in law enforcement, and a straightforward ban on social scoring and manipulative AI. In addition, citizens are now granted the right to lodge complaints about AI systems and to have AI-based decisions explained to them. The fines for compliance violations have also finally been set: they range from 7.5 million euros or 1.5 per cent of the company’s global annual turnover up to 35 million euros or 7 per cent of the company’s global annual turnover.
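The fine range quoted above can be illustrated with a small calculation. This is a minimal sketch, not a legal tool: the names `FineTier`, `LOWEST_TIER`, `HIGHEST_TIER` and `fine_ceiling` are made up for illustration, and the code assumes that the higher of the fixed amount and the turnover-based amount is the one that applies, which the text above does not spell out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTier:
    fixed_eur: int           # fixed amount in euros
    turnover_share_bp: int   # share of annual turnover in basis points (100 bp = 1 %)


# The two ends of the range quoted in the article.
LOWEST_TIER = FineTier(fixed_eur=7_500_000, turnover_share_bp=150)    # 1.5 %
HIGHEST_TIER = FineTier(fixed_eur=35_000_000, turnover_share_bp=700)  # 7 %


def fine_ceiling(tier: FineTier, annual_turnover_eur: int) -> int:
    """Upper bound of the fine in euros.

    ASSUMPTION: the higher of the fixed amount and the turnover-based
    amount applies. Integer arithmetic avoids floating-point rounding.
    """
    turnover_based = annual_turnover_eur * tier.turnover_share_bp // 10_000
    return max(tier.fixed_eur, turnover_based)
```

Under that assumption, a company with an annual turnover of 1 billion euros would face a ceiling of 70 million euros in the highest tier, because 7 per cent of its turnover exceeds the fixed 35 million euros. A company with a turnover of 100 million euros would stay at the fixed 35 million euros.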

Risk levels established by the EU AI Act

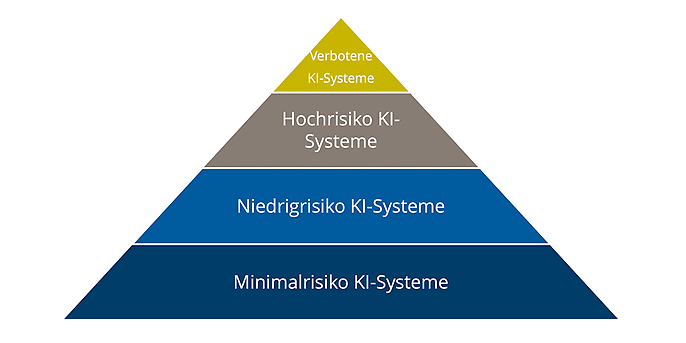

In addition to these guidelines, however, the EU AI Act focuses on the risk levels and how to deal with them. The following illustration shows the different levels:

Risk levels established by the EU AI Act

At the lowest level, minimal-risk or no-risk AI systems apply AI in a very narrowly defined area that lies within the provider’s own main area of responsibility. Examples include AI support in a video game or in an administration system. The Act does not explicitly regulate this category, so no special obligations apply.

At the next level up, the limited-risk AI systems, people already interact directly with an AI, for example when using chatbots. This is where the Act applies for the first time: it obliges the provider to be transparent (see Art. 52, ‘Transparency obligations for certain AI systems’). Accordingly, natural persons must be informed that they are interacting with an AI system, especially if techniques such as deepfakes are used.

The high-risk AI systems are the actual focus of the EU AI Act, which regulates them comprehensively and imposes far-reaching obligations on the various actors involved. They are divided into eight areas, which are listed in Annex III (High-risk AI systems referred to in Article 6(2)):

1. Biometric identification and categorisation of natural persons
2. Management and operation of critical infrastructures
3. Education and training
4. Employment, workers management and access to self-employment
5. Access to and enjoyment of essential private services and public services and benefits
6. Law enforcement
7. Migration, asylum and border control management
8. Administration of justice and democratic processes

AI systems that fall into at least one of these areas must meet strict requirements. These include risk and quality management, documentation, data quality management and data governance, processes and methods to prevent discrimination, traceability and transparency, as well as robustness, security and accuracy.
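The requirements named above lend themselves to a simple checklist. The following is a minimal sketch under stated assumptions: the requirement labels paraphrase the paragraph above and are not legal terms, and `HIGH_RISK_REQUIREMENTS` and `missing_requirements` are hypothetical names chosen for this example.

```python
from typing import Iterable

# Labels paraphrase the article's list; they are not official legal terms.
HIGH_RISK_REQUIREMENTS = (
    "risk management",
    "quality management",
    "documentation",
    "data quality management and data governance",
    "discrimination prevention",
    "traceability",
    "transparency",
    "robustness",
    "security",
    "accuracy",
)


def missing_requirements(evidenced: Iterable[str]) -> list:
    """Return the requirements without evidence yet, in the order listed above."""
    covered = set(evidenced)
    return [req for req in HIGH_RISK_REQUIREMENTS if req not in covered]
```

A team could call `missing_requirements({"documentation", "traceability"})` to see the eight items still open. Whether an item really counts as fulfilled remains a legal and organisational judgement that such a list cannot make.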

The highest level comprises the unacceptable-risk AI systems. These are practices that must be categorised as a threat to security and human rights and are therefore banned. This includes the aforementioned social scoring, but also all subliminal or exploitative techniques for manipulating behaviour.
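The four levels described above can be summarised as an ordered enumeration. This is an illustrative sketch only: `RiskLevel`, `TREATMENT`, `EXAMPLES` and `treatment_for` are hypothetical names, the example use cases are the ones mentioned in this article, and assigning a real system to a level requires a legal assessment rather than a lookup table.

```python
from enum import IntEnum


class RiskLevel(IntEnum):
    """Risk levels in ascending order of severity."""
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


# How the article describes the treatment of each level.
TREATMENT = {
    RiskLevel.MINIMAL: "no special obligations",
    RiskLevel.LIMITED: "transparency obligations (Art. 52)",
    RiskLevel.HIGH: "strict requirements (Annex III areas)",
    RiskLevel.UNACCEPTABLE: "prohibited",
}

# Example use cases taken from the article, one per level.
EXAMPLES = {
    "video game AI support": RiskLevel.MINIMAL,
    "chatbot": RiskLevel.LIMITED,
    "law enforcement": RiskLevel.HIGH,
    "social scoring": RiskLevel.UNACCEPTABLE,
}


def treatment_for(use_case: str) -> str:
    """Look up the level of a known example and describe its treatment."""
    level = EXAMPLES[use_case]
    return f"{level.name.lower()}: {TREATMENT[level]}"
```

Using `IntEnum` keeps the levels comparable, so a check such as `EXAMPLES["social scoring"] > RiskLevel.HIGH` expresses that a use case sits above the high-risk level.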

Conclusion

With the provisional agreement of 9 December 2023, the EU has developed a piece of legislation that is unique to date but that, like the GDPR, is likely to be imitated worldwide (the Brussels effect, see [4]). The law is intended to protect the rights of citizens while promoting innovation through various enshrined measures, and to enable trust in AI systems through transparency and traceability. The EU AI Act is expected to apply in the EU from 2026.
