27. November 2023 By Azza Baatout

Why traceability is important in artificial intelligence

In the world of artificial intelligence (AI), there is a widespread problem known as the black box dilemma: AI models are often opaque ‘black boxes’ whose inner workings are difficult to understand. AI practitioners therefore face the challenge of shedding light on the decision-making processes of these systems.

Traceability is a solution to this dilemma, as it allows users to track the predictions and processes of an AI. This includes the data used, the algorithms employed and the decisions made. Traceability is critical to increasing trust in AI systems and upholding ethical standards.

One of the basic functions of traceability is to document the origin of data, processes and artefacts that play a role in the development of an AI model. To give an example, a neural network designed to generate art may contain copyrighted images in its training data. Without traceability, it would be next to impossible to locate and remove these data points, which could lead to legal issues such as copyright infringement.
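To make the idea of documenting data origin more tangible, here is a minimal sketch of a provenance manifest. It is only an illustration under stated assumptions: the `ProvenanceRecord` structure, the licence labels and the example URLs are hypothetical and do not come from any particular tool.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ProvenanceRecord:
    """Hypothetical provenance entry for one training item."""
    sha256: str   # content hash, identifies the exact bytes that were used
    source: str   # where the item was obtained
    licence: str  # licence label assigned when the item was ingested


def make_record(content: bytes, source: str, licence: str) -> ProvenanceRecord:
    """Create a record at ingestion time, before the item enters training."""
    return ProvenanceRecord(hashlib.sha256(content).hexdigest(), source, licence)


def split_by_licence(records, allowed):
    """Separate records into those that may stay and those to remove."""
    keep = [r for r in records if r.licence in allowed]
    remove = [r for r in records if r.licence not in allowed]
    return keep, remove


# Illustrative data only: two made-up items with made-up sources.
manifest = [
    make_record(b"image-bytes-1", "https://example.org/a.png", "CC0"),
    make_record(b"image-bytes-2", "https://example.org/b.png", "all-rights-reserved"),
]
keep, remove = split_by_licence(manifest, allowed={"CC0", "CC-BY"})
print([r.source for r in remove])
```

With such a manifest in place, finding the problematic items becomes a simple lookup instead of a search through an undocumented data set.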

Why a traceability system is indispensable in the field of AI

Both the potential of AI and the challenges it poses are interesting topics. AI systems are having an impact in more and more areas of our daily lives, ranging from healthcare and logistics to finance and education. However, this growing presence of AI also raises questions that go beyond the mere implementation of algorithms and models. One of the key questions here is: why is a traceability system so important in AI?

My thoughts on this topic are based on the scientific article ‘Traceability for Trustworthy AI: A Review of Models and Tools’ by Marçal Mora-Cantallops. The article stresses that traceability is a key requirement for trustworthy artificial intelligence, which means keeping a full and complete record of the origin of the data, processes and artefacts involved in the production of an AI model. In this blog post, I will explore the central role of traceability in AI projects and explain why ensuring transparency, ethical standards and accountability in AI development is not just desirable but necessary.

- 1. Transparent decision-making processes: Traceability in AI systems brings transparency to decision-making processes. This means that developers and users are able to understand how an AI system arrived at a particular conclusion or recommendation. This is particularly important in critical fields such as medicine, where it is necessary to explain why an AI system made a particular diagnosis. Transparency promotes trust in AI and makes it possible to reveal potential biases or undesirable effects in decisions.

- 2. Ethics and responsibility: Traceability plays a key role in upholding ethical standards in AI. It makes it possible to document the origin and history of data and models in order to check that no discriminatory, unethical or unlawful decisions are made. This allows developers and organisations to take responsibility for the actions of their AI systems and to comply with ethical standards.

- 3. Compliance and regulation: AI systems are subject to increasingly strict legal requirements and regulations, particularly with regard to data protection (such as the GDPR) and security (such as the EU AI Regulation). Traceability helps companies and organisations to comply with these regulations. It enables every step in the AI development process to be tracked and documented, which proves particularly helpful during checks and audits. This can help a company minimise its legal risks and avoid penalties.

- 4. Building trust: Traceability systems are key to creating trust in AI systems. If users and stakeholders understand how AI decisions are made and how data is used, they will be more willing to accept and utilise AI systems. Trust in AI is critical to ensuring its acceptance and the long-term success of AI projects.

Let us take a closer look at traceability tools

Three concepts play a decisive role when looking at these tools: traceability, repeatability and reproducibility. Traceability makes it possible to clearly document the origin and evolution of data and processes. Repeatability ensures that an experiment can be repeated under exactly the same conditions. Reproducibility, on the other hand, means that other researchers can achieve similar results by conducting the same experiment with independent data sets.
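The difference between repeatability and reproducibility can be illustrated with a small toy experiment. This is only a sketch under assumptions: `run_experiment` and `make_dataset` are hypothetical helpers, the data is synthetic, and the tolerance of 0.5 is an arbitrary choice for this example.

```python
import random
import statistics


def run_experiment(data, seed):
    """Toy 'experiment': the mean of a bootstrap resample of the data."""
    rng = random.Random(seed)  # local generator, so no hidden global state
    resample = rng.choices(data, k=len(data))
    return statistics.fmean(resample)


def make_dataset(seed, n=5000):
    """Hypothetical stand-in for collecting data from the same process."""
    rng = random.Random(seed)
    return [rng.gauss(10.0, 2.0) for _ in range(n)]


original = make_dataset(seed=1)
independent = make_dataset(seed=2)  # a separately 'collected' data set

# Repeatability: same data, same seed, same code gives the identical result.
first = run_experiment(original, seed=42)
second = run_experiment(original, seed=42)
repeatable = first == second

# Reproducibility: independent data gives a similar, not identical, result.
other = run_experiment(independent, seed=7)
reproducible = abs(first - other) < 0.5  # tolerance chosen for illustration

print(repeatable, reproducible)
```

The sketch only works because the seed and the data are recorded explicitly, which is exactly the kind of information a traceability system needs to capture.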

Material published across data repositories is often difficult to repeat or reproduce. The main problem is that the software and system dependencies required to execute the code are not adequately mapped. Even if researchers have left notes or instructions, these often lack the necessary context or insights into the workflow. As a result, running the code is either practically impossible or requires a great deal of additional work.

Storing code and data on personal websites or in repositories such as GitLab or GitHub often proves to be an ineffective strategy, since in most cases key information is missing, including the runtime environment, context and system information.
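As a minimal sketch of what capturing the runtime environment could look like, the following example records the interpreter, the operating system and the installed packages using only the Python standard library. The function name `capture_environment` and the layout of the snapshot are assumptions made for this illustration. Dedicated tools capture considerably more, for example system libraries and container images.

```python
import json
import platform
import sys
from importlib import metadata


def capture_environment():
    """Collect a snapshot of the interpreter, OS and installed packages."""
    packages = []
    for dist in metadata.distributions():
        name = dist.metadata.get("Name")
        if name:  # skip installations with incomplete metadata
            packages.append(f"{name}=={dist.version}")
    packages.sort()
    return {
        "python": sys.version,
        "implementation": platform.python_implementation(),
        "platform": platform.platform(),
        "machine": platform.machine(),
        "packages": packages,
    }


snapshot = capture_environment()
# Storing this JSON next to the code and data keeps the context together.
print(json.dumps(snapshot, indent=2)[:300])
```

Saving such a snapshot alongside every experiment run is a small step, but it preserves part of the information that is otherwise missing from a plain code repository.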

Many online tools that have come onto the market are built on cloud storage and containerisation technologies such as Docker. These tools all share the goal of fully mapping the environments in which research is conducted in order to make the entire research process reusable, shareable and ultimately reproducible. The most comprehensive of these tools primarily cover the technical aspect of reproducibility, including the environment, code, data and information on the origin.

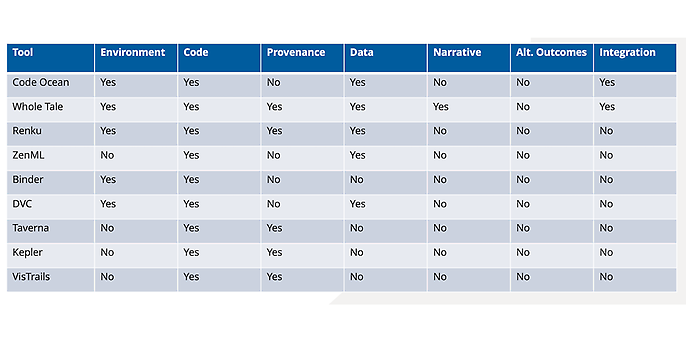

Comparison of tools used to support reproducible research methods. Source: ‘Traceability for Trustworthy AI: A Review of Models and Tools’ by Marçal Mora-Cantallops.

An oft-neglected aspect of many tools is the documentation of narratives. This involves documenting the code in text form and the textual elements that led a developer to decide in favour of certain tools and workflows over others. Having detailed information about the researchers’ motivation for selecting a particular collection of data and understanding the reasoning behind the structure and testing of the model are critical when it comes to the transparency and reproducibility of the methods.
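One lightweight way to document such narratives is to store a short, structured decision record next to the code. The following is a minimal sketch and not a feature of any of the tools discussed here: the `DecisionRecord` structure, its field names and the example content are all hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import date


@dataclass
class DecisionRecord:
    """Hypothetical structure for one documented design decision."""
    topic: str
    choice: str
    alternatives: list = field(default_factory=list)
    rationale: str = ""
    decided_on: str = field(default_factory=lambda: date.today().isoformat())


# Illustrative content only.
record = DecisionRecord(
    topic="training data",
    choice="public-domain image collection",
    alternatives=["web-scraped images"],
    rationale="Licence status of scraped images could not be verified.",
)

# Serialise to JSON so the narrative can be versioned with code and data.
narrative_json = json.dumps(asdict(record), indent=2)
restored = DecisionRecord(**json.loads(narrative_json))
print(narrative_json)
```

Because the record is plain JSON, it can be versioned together with the code, so the reasoning behind a decision stays attached to the state of the project it refers to.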

There are tools such as Code Ocean, Whole Tale and Renku, which are highly focussed on the principles of reproducibility. These platforms offer functions and resources that make it easier for scientists to make their research results and methods transparent and reproducible for others. They assist in documenting narratives and enable the end-to-end traceability of the research process, helping to increase transparency and trustworthiness in AI research and ensuring the repeatability and reproducibility of methods.

To give a specific example, the Binder tool focuses on providing an environment in which code can be executed. The situation is similar with other tools that are not shown in the table, such as OpenML and Madagascar, while most other tools focus on providing pipelines to make experiments repeatable. It is also important to emphasise that in most cases the inability to reproduce the experiment under the same running conditions and at the same location as the original presents a risk in terms of replicability as well as repeatability.

Despite the long list of tools shown above and their shared aim of enabling reproducible research, an analysis of their listed features (whether on their websites or in promotional materials) shows that most of them are still far from fully complying with the traceability requirements set out in the guidelines issued by the European Commission's High-Level Expert Group on AI (AI HLEG). The reproducibility (or replicability) of methods is also often insufficiently taken into account.

However, it is worth noting that some of the tools analysed in the underlying scientific publication are no longer actively supported or updated. For example, VisTrails has not been maintained since 2016, and the current version of Sumatra (0.7.0) dates back to 2015. This means that outdated tools may pose a risk to the reproducibility of research methods just as much as the loss of methods or data.

Outlook

In this journey through the world of traceability in AI projects, I have given you an inside look at the challenges associated with the black box dilemma and explained the central role that traceability plays in the transparent and ethically responsible development of AI systems. However, there is still a way to go on the road to transparent AI research. While there are already great tools available that support the technical aspects of reproducibility, the comprehensive documentation of narratives and motivations remains the main focus of further development work. Bridging the gap between the code and the decisions that underlie it will be critical to ensuring that AI research is not only reproducible but also comprehensible.

In the next blog post, I will dive deeper into traceability tools that show great promise and go beyond theory by looking at practical applications in AI projects. I will also present a demo to show how these tools support the technical side of research and how they can be used in practice.
